View this article on Notion

This article is also published at j000e.com

The Ultimate FLUX.1 Hands-On Guide: From Beginner to Advanced with LoRA and ControlNet

Learn to Use FLUX.1: The Unlimited Image Generator Behind Elon Musk's Grok 2

Introduction

In this article, we will guide you step-by-step on how to use FLUX.1.

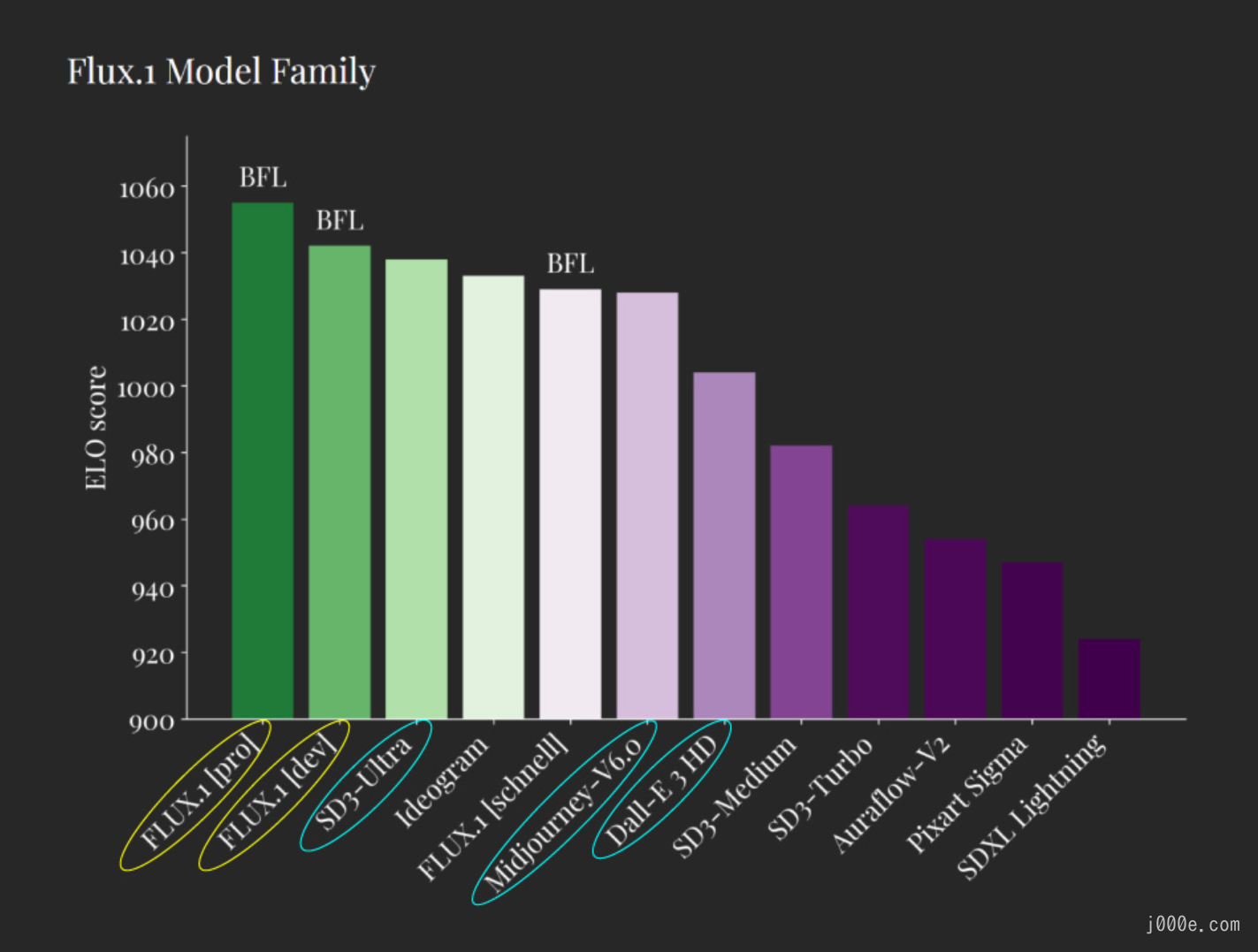

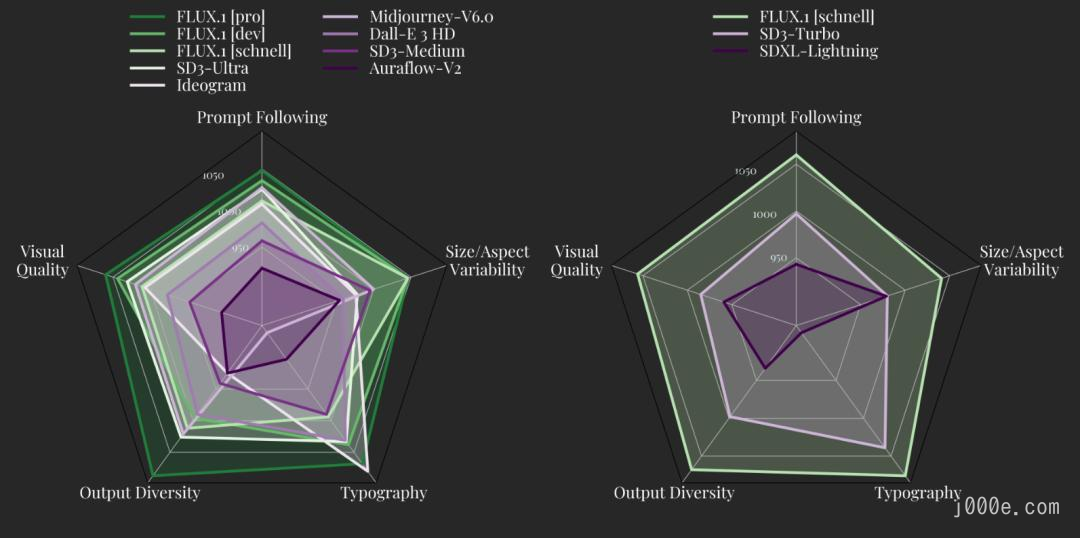

The open-source community has welcomed a new text-to-image generation model, FLUX.1, following the releases of SD 3 Medium and Kolors. Developed by former core members of Stability AI, FLUX.1 significantly surpasses the quality of SD 3 and even rivals the closed-source Midjourney v6.1 model. This positions FLUX.1 as a new benchmark in AI-generated art and injects fresh momentum into the development of open-source AI art.

The company behind FLUX.1 is Black Forest Labs, founded by the original team behind Stable Diffusion and several former researchers from Stability AI. Like Stability AI, Black Forest Labs is dedicated to developing high-quality multimodal models and making them open-source. The company has already completed a $31 million seed funding round.

Black Forest Labs website: https://blackforestlabs.ai/

FLUX.1 offers three versions: Pro, Dev, and Schnell. The first two models outperform mainstream models like SD3-Ultra, while the smaller FLUX.1 [schnell] surpasses larger models such as Midjourney v6.0 and DALL·E 3.

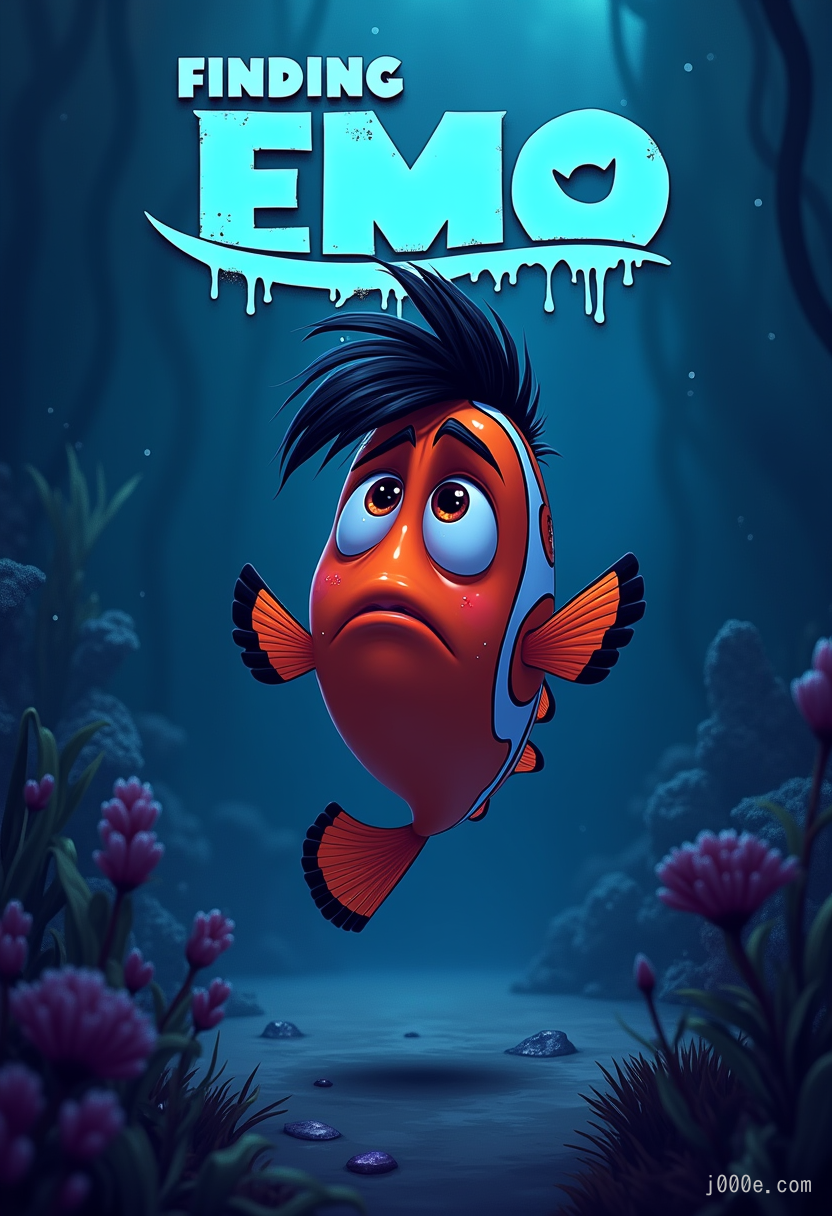

FLUX.1 excels in text generation, following complex instructions, and rendering human hands. Below are examples of images generated by its most powerful model, FLUX.1 [pro]. As you can see, even when generating large blocks of text or multiple characters, there are no errors in details such as text or human hands.

Try FLUX.1 Online For Free

Here are some websites where you can experience the FLUX.1 model online. If you just want to explore and use this powerful text-to-image model, free online access is the best option for you.

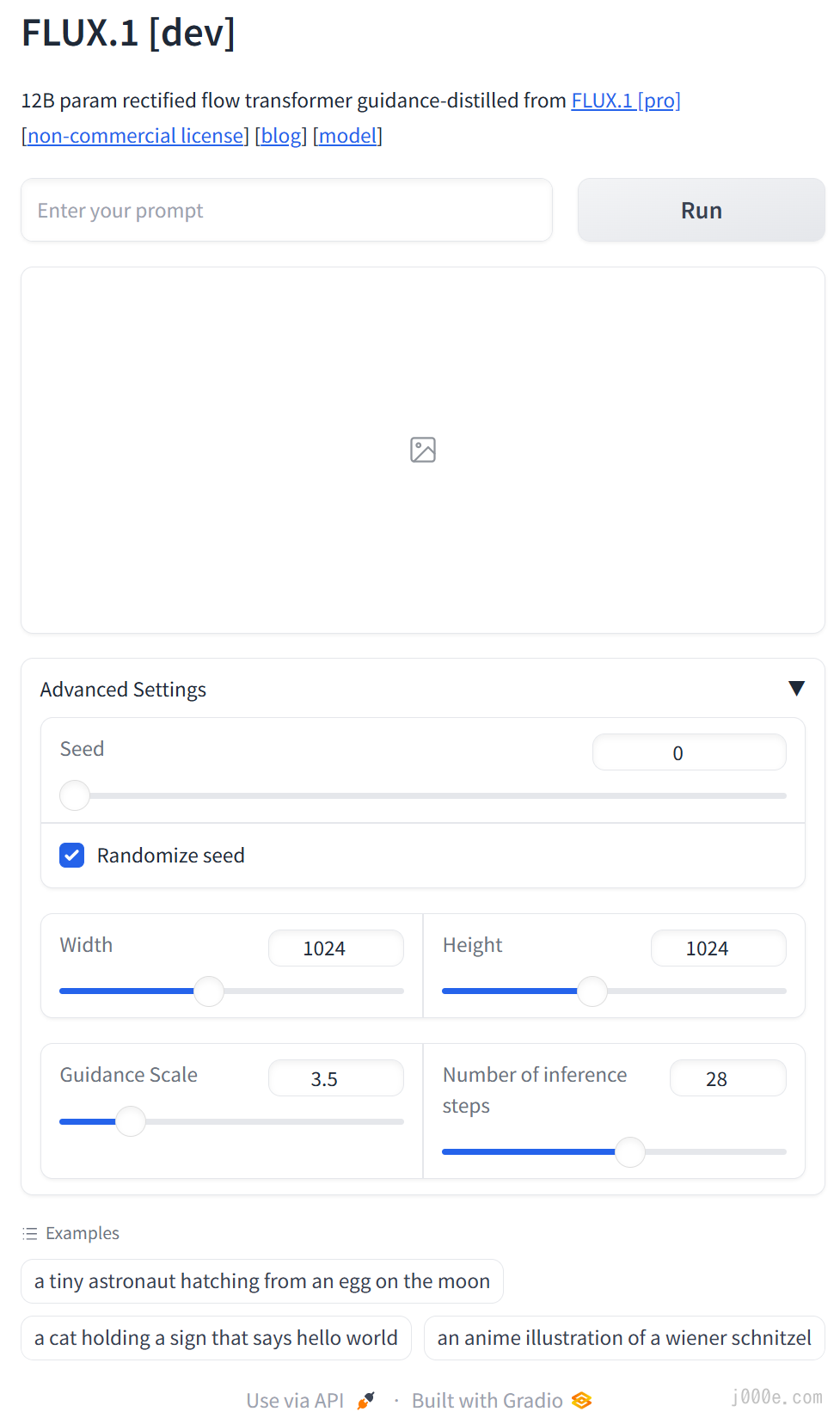

Hugging Face (Free | Limited Usage Frequency)

FLUX.1-dev:

https://huggingface.co/spaces/black-forest-labs/FLUX.1-dev

FLUX.1-schnell:

https://huggingface.co/spaces/black-forest-labs/FLUX.1-schnell

User Interface:

Note: Currently, FLUX.1 schnell has few usage restrictions, but after generating multiple images with FLUX.1 dev, there will be a cooldown period before you can use it again.

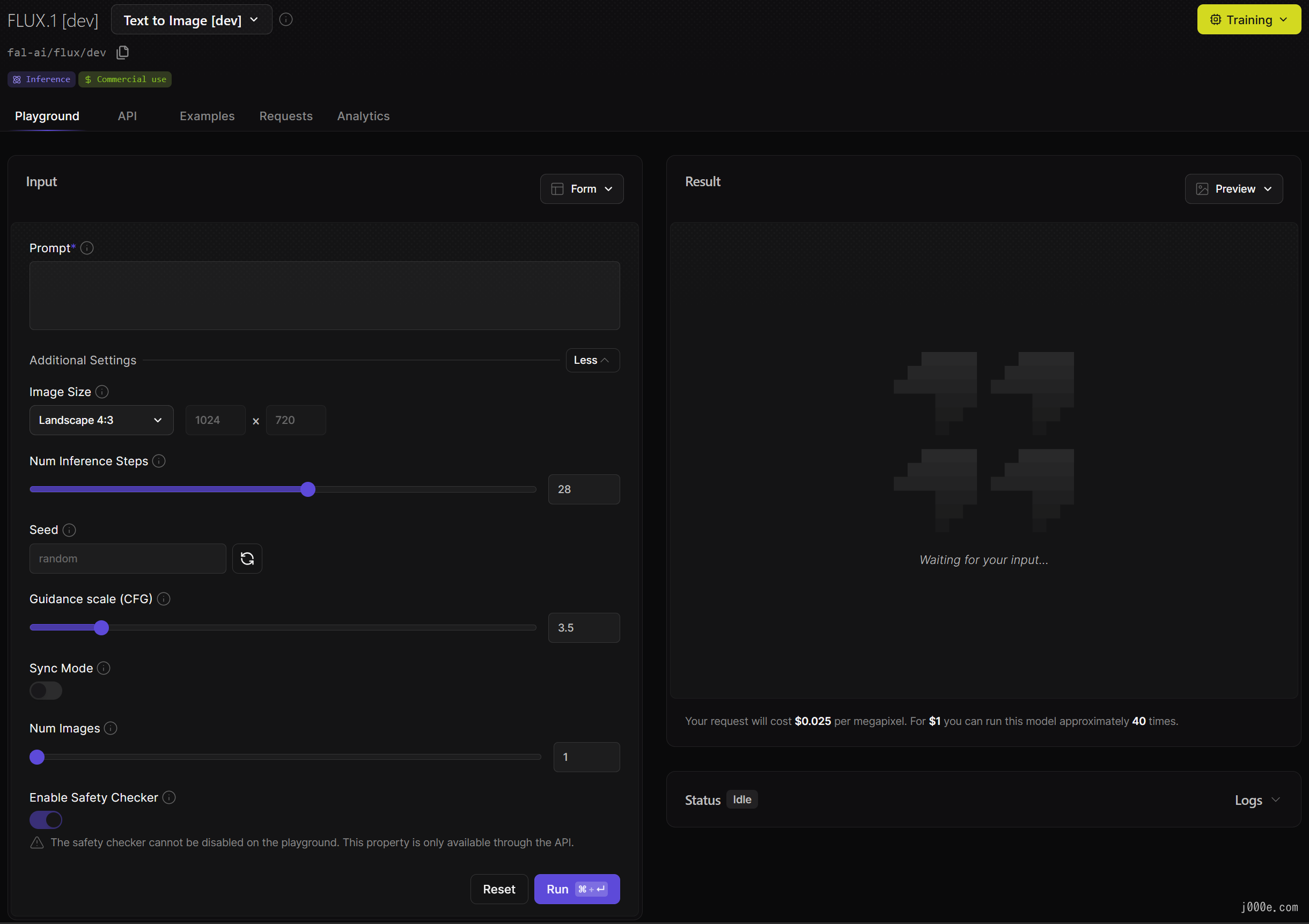

fal.ai (Free | $1 Free Credit)

FLUX.1 schnell:

https://fal.ai/models/fal-ai/flux/schnell/playground

FLUX.1 dev:

https://fal.ai/models/fal-ai/flux/dev/playground

FLUX.1 pro:

https://fal.ai/models/fal-ai/flux-pro

After logging in with GitHub, you will receive $1 in credit, which allows you to use FLUX.1 pro 20 times, FLUX.1 dev 40 times, or FLUX.1 Schnell 333 times for free.

Here is the pricing table for fal.ai:

| Model Name | Unit Price (USD) | Notes |
|---|---|---|
| FLUX.1 [dev] | 0.025 per megapixel | Billed by the size of generated images. |
| FLUX.1 [schnell] | 0.003 per megapixel | Billed by the size of generated images. |
| FLUX.1 [pro] | 0.05 per megapixel | Billed by the size of generated images. |
| Stable Diffusion 3 - Medium | 0.035 per image | |
| Stable Video | 0.075 per video | |
| Face Swap | 0.001 per image | |
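The free-use counts quoted for the $1 credit follow directly from the per-megapixel prices. Here is a minimal sketch of that arithmetic, assuming each generated image is billed as exactly one megapixel; the function name `images_for_credit` is illustrative and not part of any fal.ai API.

```python
from decimal import Decimal

# Prices per megapixel in USD, taken from the fal.ai pricing table.
PRICE_PER_MEGAPIXEL = {
    "FLUX.1 [pro]": Decimal("0.05"),
    "FLUX.1 [dev]": Decimal("0.025"),
    "FLUX.1 [schnell]": Decimal("0.003"),
}


def images_for_credit(credit_usd, megapixels_per_image=1):
    """Return how many whole images each model can generate with the credit."""
    credit = Decimal(str(credit_usd))
    size = Decimal(str(megapixels_per_image))  # assumption: billing is linear in size
    return {
        model: int(credit // (price * size))
        for model, price in PRICE_PER_MEGAPIXEL.items()
    }


free_images = images_for_credit(1)
for model, count in free_images.items():
    print(f"{model}: {count} images")
```

Larger images cost proportionally more under this assumption, so passing `megapixels_per_image=2` halves the counts.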

User Interface:

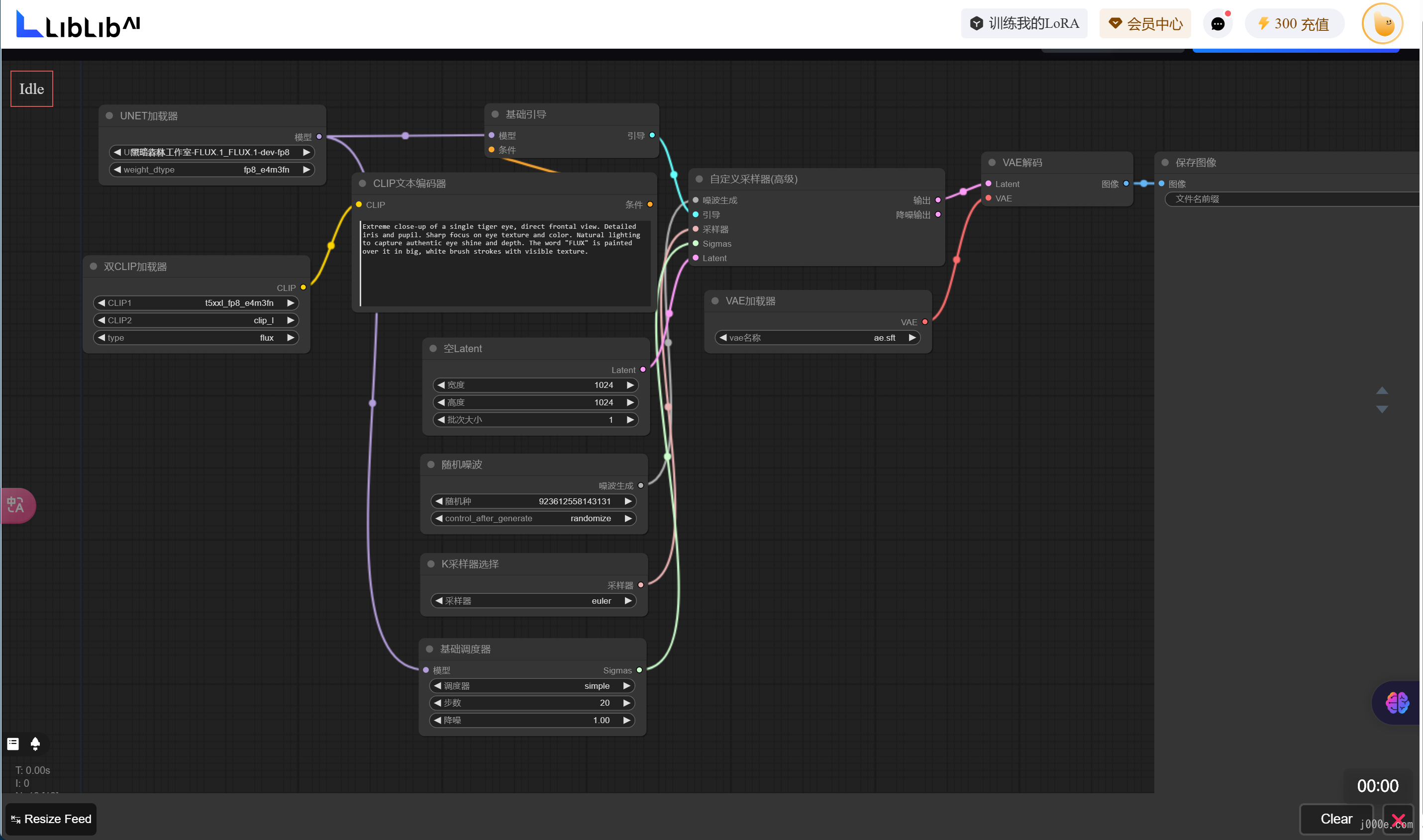

LiblibAI (Free 300 Points | Chinese Website)

LiblibAI offers two ways to use FLUX.1. The 300 free points cover approximately 15 images through the Web UI, or 60 through ComfyUI.

Method 1: Web UI (Each image consumes 20 points)

Currently, the site offers four versions:

- dev-fp8 version: The most recommended version, fast and stable.

- dev version: Also known as the fp16 version, slightly slower to load and prone to running out of memory.

- schnell version: Turbo version, with average performance; not recommended.

- VAE version

Recommended settings for the Web UI:

- Sampling method: Only FlowMatchEuler is supported. Embedding is compatible with SD1.5, but there may be issues with SDXL.

- CFG official recommendation: 3.5.

- Sampling steps: The default is 20, which can be increased as needed.

Method 2: ComfyUI (Each image consumes 5 points)

The regular version requires at least 32GB of system RAM, and testing shows that it can fill the entire VRAM of an RTX 4090. The dev-fp8 version is recommended for local use.


Replicate (Not Free)

https://replicate.com/black-forest-labs

Replicate offers three FLUX.1 models: FLUX.1-dev, FLUX.1-pro, and FLUX.1-schnell, all of which are paid.

Pricing table:

| Model | Output |
|---|---|
| black-forest-labs/flux-dev | $0.030 / image |
| black-forest-labs/flux-pro | $0.055 / image |
| black-forest-labs/flux-schnell | $0.003 / image |
| stability-ai/stable-diffusion-3 | $0.035 / image |

User Interface:

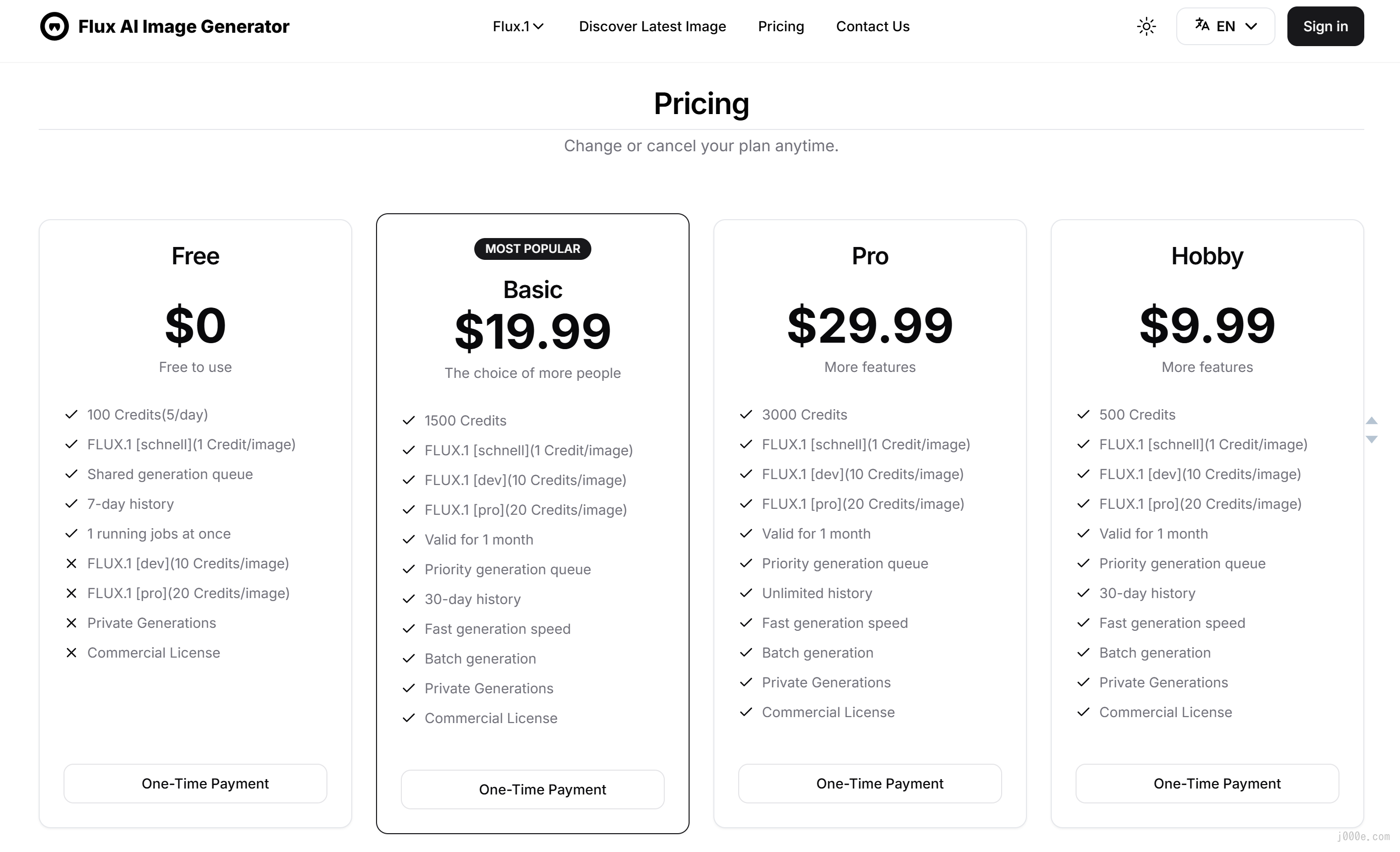

Flux AI Image Generator (Only FLUX.1 schnell Available for Free | 100 Free Points)

https://fluxaiimagegenerator.com/

Currently, only the FLUX.1 schnell version is available for free, and images generated for free must be publicly shared. Upon registration, you receive 100 free points, with 5 points available for use each day. Each image generated consumes 1 point.


Mystic (Free)

https://www.mystic.ai/black-forest-labs

Offers FLUX.1-pro, FLUX.1-dev, and FLUX.1-schnell.


Install ComfyUI

The official ComfyUI GitHub repository provides basic installation guides for Windows, Mac, Linux, and Jupyter Notebook.

In this article, we will demonstrate the installation and usage process step by step, using a Windows system with an Nvidia GPU as an example.

Hardware Requirements:

| Component | Requirement |
|---|---|
| GPU | At least 4GB VRAM. NVIDIA graphics cards are recommended, preferably an RTX 3060 or higher. GPUs with less than 3GB VRAM can run with the --lowvram option, but performance may decrease. |
| CPU | Can run on the CPU with the --cpu option, but more slowly. |
| Memory | At least 8GB of system memory is recommended. |
| Operating System | Supports Windows. Installation instructions are also provided for Macs with Apple Silicon. |
| Storage | An SSD is highly recommended to speed up loading and running model files, with at least 40GB of free disk space. |
| Software Dependencies | A Python environment is required; one is embedded in the installation package. Python libraries such as torch and transformers need to be installed. Some plugins may require Git for installation. |
| Note | Specific requirements may change with updates to ComfyUI, so check the official documentation. |

ComfyUI Official Repository Address: ComfyUI's Github Repository

In the README section of the repository, find the blue "Direct link to download" link and click it to download the official portable package.

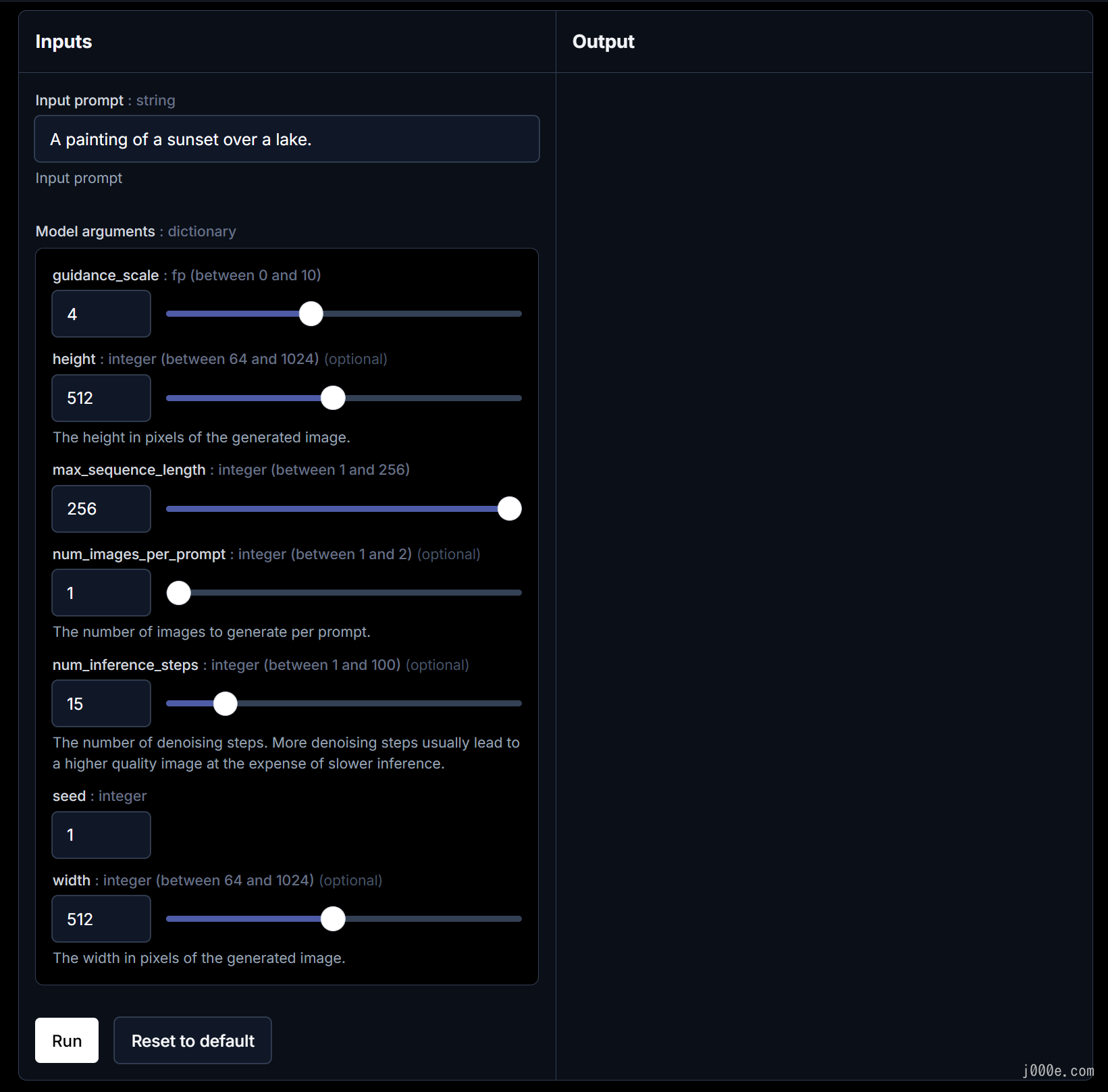

Unzip the integrated package to the desired local directory for ComfyUI installation. (I recommend using 7-Zip for dealing with compressed files). The file directory should look like this after decompression:

Explanation of ComfyUI Directory:

ComfyUI_windows_portable

├── ComfyUI // Main folder for Comfy UI

│ ├── .git // Git version control folder, used for code version management

│ ├── .github // GitHub Actions workflow folder

│ ├── comfy //

│ ├── 📁 comfy_extras //

│ ├── 📁 custom_nodes // Directory for ComfyUI custom node files (plugin installation directory)

│ ├── 📁 input // ComfyUI upload folder, when you use nodes like load image, the corresponding uploaded images will be stored in this folder

│ ├── 📁 models // Corresponding model file configuration folder

│ | ├── 📁 checkpoints // Path for storing large model checkpoint files

│ | ├── 📁 clip // Path for storing CLIP files

│ | ├── 📁 clip_vision // Path for storing CLIP_vision files

│ | ├── 📁 configs

│ | ├── 📁 controlnet // Path for storing ControlNet model files

│ | ├── 📁 diffusers

│ | ├── 📁 embedding // Path for storing embedding model files

│ | ├── 📁 gligen

│ | ├── 📁 hypernetworks // Path for storing hypernetwork models

│ | ├── 📁 loras // Path for storing Lora model files

│ | ├── 📁 style_models

│ | ├── 📁 unet

│ | ├── 📁 upscale_models // Path for storing upscale model files

│ | ├── 📁 vae // Path for storing VAE model files

│ | └── 📁 vae_approx

│ ├── 📁 notebooks

│ ├── 📁 user // ComfyUI user information (such as configuration files, workflow information, etc.)

│ ├── 📁 output // ComfyUI image output folder, when using nodes like save image, the generated images will be stored in this folder

│ ├── extra_model_paths.yaml.example // Extra model file path configuration file, if you set this, please remove the .example suffix and edit with a text editor

│ └── ... // Other files

├── 📁 config // Configuration folder

├── 📁 Python_embeded // Embedded Python files

├── 📁 update

│ ├── update.py // Python script for ComfyUI

│ ├── update_comfyUI.bat // The batch command recommended by the author of ComfyUI to upgrade ComfyUI

│ └── update_comfyui_and_python_dependencies.bat // Only run this batch command when there are issues with your Python dependency files

├── comfyui.log // Comfy UI runtime log file

├── README_VERY_IMPORTANT.txt // README file, includes methods and explanations for file usage, etc.

├── run_cpu.bat // Batch file, double-click to start ComfyUI when you have an AMD graphics card or only a CPU

└── run_nvidia_gpu.bat // Batch file, double-click to start ComfyUI when you have an Nvidia graphics card

Let's take a look at the differences between the various versions of the model and download the one we need.

Different Versions of FLUX.1

Here is a detailed introduction to the different versions of the FLUX.1 model (This section is meant to help you understand the differences between the models; the next section will provide download instructions):

| Feature/Version | Flux.1 Pro | Flux.1 Dev | Flux.1 Schnell |
|---|---|---|---|
| Overview | Cutting-edge performance in image generation with top-notch prompt following, visual quality, image detail, and output diversity. | Open-source model with quality and prompt adherence similar to Pro, more efficient for users with GPUs. | Open-source model, the fastest option for local development and personal use, with quick response and low configuration requirements. |
| Visual Quality | Top-tier | Similar to Pro | Good |
| Image Detail | Top-tier | Similar to Pro | Good |
| Output Diversity | High | Medium | Medium |
| Prompt Adherence | High | Medium | Medium |
| Hand Detail Optimization | Yes | Yes | Yes |
| Pricing (Per Image) | $0.055 | API: $0.03, Free download | API: $0.003, Free download |
| License Type | Enterprise solutions, API only | Open-source, FLUX.1-dev Non-Commercial License | Apache 2.0, Commercial use allowed |
| Model Download | Not available for download, API access only: [FLUX.1 [pro] API](http://docs.bfl.ml/), Flux.1 Pro Replicate API, Flux.1 Pro FAL AI API, Flux.1 Pro Mystic AI API | Available for download: Flux.1 Dev GitHub repository, Flux.1 Dev Hugging Face, Flux.1 Dev Replicate API, Flux.1 Dev FAL AI API, Mystic AI | Available for download: Flux.1 Schnell GitHub repository, Flux.1 Schnell on Hugging Face, Flux.1 Schnell Replicate API, Try Flux.1 Schnell on FAL AI, Flux.1 Schnell Mystic AI API |
| Use Case | Professional use, enterprise customization | Development and personal use | Personal and commercial use |

For more information, visit the FLUX.1 repository: https://github.com/black-forest-labs/flux

Download FLUX.1 Model

You can choose to download the official original model or the quantized model. Select the one that suits your needs from the options below.

5 Mainstream FLUX.1 Model Versions

| Category | Version | Details |
|---|---|---|
| Black Forest Labs Official Models | FLUX.1-dev (not for commercial use), FLUX.1-schnell (for commercial use) | Supports ControlNet and LoRA; requires 16GB or more of GPU memory |
| FP8 Versions Released by @Kijai | flux1-dev-fp8.safetensors, flux1-schnell-fp8.safetensors | Works with 8GB of GPU memory; suitable for the original workflow; supports ControlNet and LoRA |
| FP8 Versions Released by ComfyUI | flux1-dev-fp8.safetensors, flux1-schnell-fp8.safetensors | Works with 8GB of GPU memory; integrates CLIP and VAE; suitable for the simplified workflow; supports ControlNet and LoRA |
| NF4 Quantized Versions Released by @lllyasviel | flux1-dev-bnb-nf4.safetensors (v1 / v2) | Works with 6GB of GPU memory; integrates CLIP and VAE; suitable for the simplified workflow; supports LoRA and ControlNet |
| GGUF Quantized Versions Released by @City96 | Q8 / Q5 / Q4 | Q8 offers the best quality with the highest GPU memory usage; Q4 offers the lowest latency. WebUI Forge supports GGUF Q8/Q5/Q4. ComfyUI supports them through the ComfyUI-GGUF plugin. Works with 8GB of GPU memory; suitable for the original workflow; supports ControlNet and LoRA |

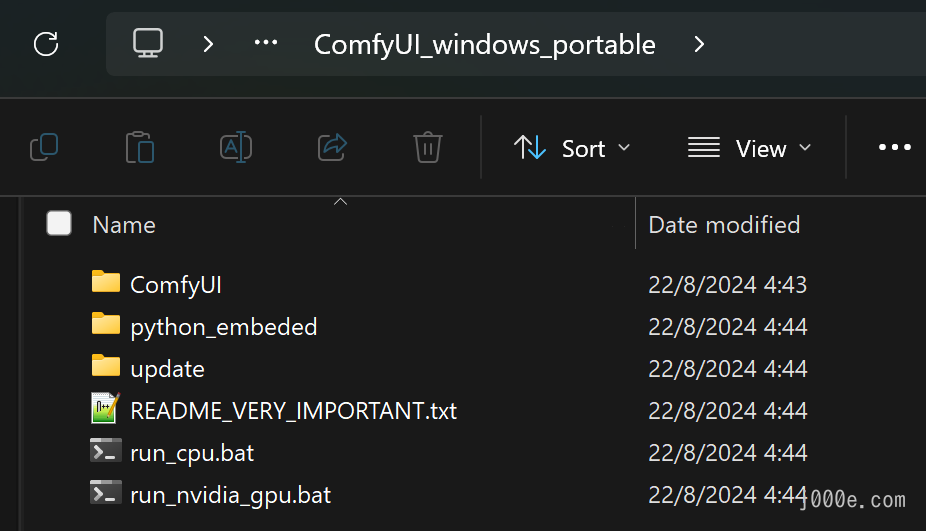

Place the downloaded large model into the ComfyUI\models\unet directory.

- If you have 16GB or more of VRAM, the official models are undoubtedly the best choice, offering the best performance and image quality.

- Next are the FP8 models, which are significantly smaller than the original models and can run on 8GB VRAM, with no noticeable decline in critical text and detail generation quality.

- Then there are the NF4 quantized versions released by @lllyasviel. These models are even smaller and generate images faster. They can run on 6GB VRAM when using the shared mode in WebUI Forge. There are two versions, v1 and v2, with v2 offering better detail and speed.

- Finally, there are three GGUF quantized versions developed by @City96. The Q8 version offers better image output and speed than FP8, requiring more than 12GB of VRAM; the Q4 version can run on 8GB VRAM and provides slightly better generation quality than NF4.

- Note: The usage licenses for these quantized versions are consistent with the original models, i.e., Dev is not for commercial use, while Schnell is for commercial use.
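The guidance above can be condensed into a small lookup. This is only a sketch of the rules of thumb in this section: the function name `recommend_flux_variant` is hypothetical, and the thresholds are the VRAM figures quoted in the bullets, not measured limits.

```python
def recommend_flux_variant(vram_gb):
    """Map available VRAM (in GB) to the variant suggested by this section."""
    if vram_gb >= 16:
        return "official FLUX.1 model (dev or schnell)"
    if vram_gb > 12:  # Q8 is described as needing more than 12GB
        return "GGUF Q8"
    if vram_gb >= 8:
        return "FP8 (or GGUF Q4)"
    if vram_gb >= 6:
        return "NF4 v2 (WebUI Forge shared mode)"
    return "no listed variant fits; consider the online services"


for vram in (24, 14, 8, 6, 4):
    print(f"{vram}GB -> {recommend_flux_variant(vram)}")
```

Actual memory use also depends on resolution, the text encoder you pick, and whatever else is running on the GPU, so treat the result as a starting point.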

FLUX.1 fp8 Version By Kijai

For most users, running the official model smoothly can be quite a challenge. An optimized version of the FLUX.1 model published by a community author may provide a better experience.

https://huggingface.co/Kijai/flux-fp8/tree/main

| Version Name | VRAM Requirement | Download Link |
|---|---|---|
| FLUX.1 [dev] fp8 | Minimum 12GB VRAM | Download Here |
| FLUX.1 [schnell] fp8 | May run on less than 12GB VRAM | Download Here |

Here, I choose to download the FLUX.1 [schnell] fp8 for testing. You can download the model as needed.

- Download flux1-schnell-fp8.safetensors.

- Place the downloaded model file in the ComfyUI/models/unet/ folder.

What does “fp” mean?

"fp" stands for floating point: names such as fp16 and fp8 indicate that each model weight is stored as a 16-bit or 8-bit floating-point number, and the fp8 files are quantized versions of the model. Quantization is a technique used to reduce the size and computational requirements of machine learning models, particularly large ones. By converting high-precision parameters (like 32-bit floating-point numbers) into lower-precision formats (such as 8-bit integers), quantization decreases memory usage and accelerates inference without significantly compromising model accuracy. This is crucial for deploying large models on resource-constrained hardware, such as mobile phones, edge devices, or consumer GPUs. Quantization can be applied after training (post-training quantization) or during it (quantization-aware training) to balance efficiency against accuracy.
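To make the idea concrete, here is a minimal sketch of symmetric 8-bit integer quantization in plain Python. It is an illustration of the general technique only: the fp8 checkpoints in this guide use an 8-bit floating-point format rather than this integer scheme, and the sample weights are made up.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to signed 8-bit integers."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs else 1.0  # one float scale shared by all values
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.8123, -0.0457, 0.3310, -1.2704, 0.0009]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("quantized:", q)
print("largest rounding error:", max_error)
```

Each value now needs one byte instead of four, at the cost of a rounding error of at most half the scale per weight.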

What is a UNet model?

The UNet model is a type of convolutional neural network (CNN) designed primarily for image segmentation, where the goal is to classify each pixel in an image. It features a symmetric encoder-decoder structure: the encoder captures contextual information by progressively downsampling the input image, while the decoder reconstructs the image with precise pixel-level details through upsampling. The model's key innovation is the use of skip connections that link corresponding layers in the encoder and decoder, enabling it to retain and combine both high-level and low-level features, which is crucial for accurate segmentation and other pixel-wise prediction tasks.

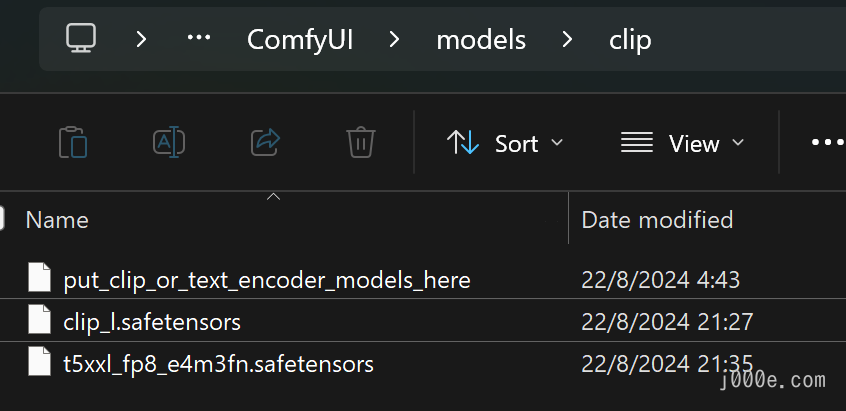

Download ComfyUI CLIP Models

ComfyUI flux_text_encoders on Hugging Face

| Model File Name | Size | Note | Link |
|---|---|---|---|
| clip_l.safetensors | 246 MB | | Download |
| t5xxl_fp8_e4m3fn.safetensors (Recommended) | 4.89 GB | For lower memory usage (8-12GB) | Download |
| t5xxl_fp16.safetensors | 9.79 GB | For better results, if you have high VRAM and more than 32GB of RAM. | Download |

- Download clip_l.safetensors.

- Download t5xxl_fp8_e4m3fn.safetensors or t5xxl_fp16.safetensors, depending on your VRAM and RAM.

- Place the downloaded model files in the ComfyUI/models/clip/ folder.

Note: If you have used SD 3 Medium before, you might already have the two models above.

What is CLIP?

In ComfyUI, the CLIP models are the text encoders that turn your prompt into the conditioning that guides image generation. The FLUX.1 workflows in this article load two of them together: clip_l and t5xxl.

CLIP (Contrastive Language-Image Pretraining) is a pre-trained vision-language model released by OpenAI in 2021. This model learns from a vast amount of internet images and text pairs through unsupervised learning, enabling it to understand image content and associate it with natural language descriptions even without domain-specific labeled data. The core idea of the CLIP model is to map images and texts into a shared high-dimensional vector space, so that similar text descriptions and corresponding images are closer in this space.
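The "shared vector space" idea can be illustrated with cosine similarity. The sketch below uses tiny made-up 3-dimensional vectors in place of real CLIP embeddings (which have hundreds of dimensions and come from the trained encoders); only the comparison step is shown.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy embeddings, invented for illustration only.
image_embedding = [0.9, 0.1, 0.3]
text_embeddings = {
    "a photo of a cat": [0.8, 0.2, 0.4],
    "a photo of a car": [0.1, 0.9, 0.2],
}

scores = {
    caption: cosine_similarity(image_embedding, vector)
    for caption, vector in text_embeddings.items()
}
best_caption = max(scores, key=scores.get)
print(best_caption, scores)
```

The caption whose vector points in nearly the same direction as the image vector gets the higher score, which is how matching text and images end up "closer" in the shared space.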

In semantic segmentation tasks, CLIP can serve as a feature extractor, generating a contextually relevant semantic map for input images based on text descriptions. This capability is useful in fields such as image understanding and autonomous driving. Users can leverage its cross-modal abilities to guide segmentation tasks; for example, by inputting a text about "cats" and then guiding how to distinguish a cat's face from other parts.

What is T5XXL?

T5XXL is the largest variant of the Text-To-Text Transfer Transformer (T5) model, designed by Google for a wide range of natural language processing tasks. With billions of parameters, T5XXL excels in tasks such as translation, summarization, question-answering, text generation, and even code completion by framing all tasks as text-to-text problems. Its massive size allows it to capture complex patterns and nuances in language, making it one of the most powerful models in the T5 family. Despite its resource-intensive nature, T5XXL is widely used in research and industry for applications that demand high accuracy and sophisticated language understanding, particularly in areas where nuanced language comprehension is crucial.

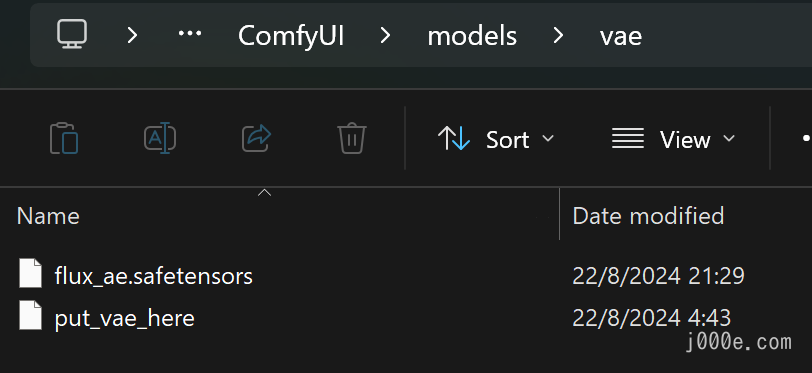

Download FLUX.1 VAE Model

FLUX.1-schnell on Hugging Face

| File Name | Size | Link |
|---|---|---|
| ae.safetensors | 335 MB | Download |

- Download the ae.safetensors model.

- Place the downloaded model file in the ComfyUI/models/vae folder.

- For easy identification, you can rename it to flux_ae.safetensors.
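With the model, text encoders, and VAE downloaded, a quick check that everything sits in the right folder can save a failed first run. This is a minimal sketch: the file list mirrors the downloads chosen in this guide, and the install path in the example is an assumption you should change to your own.

```python
from pathlib import Path

# Files downloaded in this guide, grouped by their folder under ComfyUI/models.
EXPECTED_FILES = {
    "unet": ["flux1-schnell-fp8.safetensors"],
    "clip": ["clip_l.safetensors", "t5xxl_fp8_e4m3fn.safetensors"],
    "vae": ["ae.safetensors"],
}


def find_missing_models(comfyui_dir, expected=EXPECTED_FILES):
    """Return the relative paths of expected model files that are not present."""
    models_dir = Path(comfyui_dir) / "models"
    missing = []
    for folder, filenames in expected.items():
        for filename in filenames:
            if not (models_dir / folder / filename).is_file():
                missing.append(f"{folder}/{filename}")
    return missing


if __name__ == "__main__":
    # Hypothetical install location; point this at your own ComfyUI folder.
    for path in find_missing_models("ComfyUI_windows_portable/ComfyUI"):
        print("missing:", path)
```

If you renamed the VAE to flux_ae.safetensors or chose a different model variant, adjust `EXPECTED_FILES` to match.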

What is a VAE model?

A Variational Autoencoder (VAE) is a type of deep learning model used to generate new data similar to a given dataset. It works by compressing input data into a lower-dimensional latent space represented as a probability distribution, then reconstructing the original data by sampling from this distribution. This allows the VAE to create diverse outputs, making it useful for tasks like image generation, data imputation, and anomaly detection. By learning the underlying distribution of the data, VAEs can generate new, similar data points in a structured and probabilistic manner.

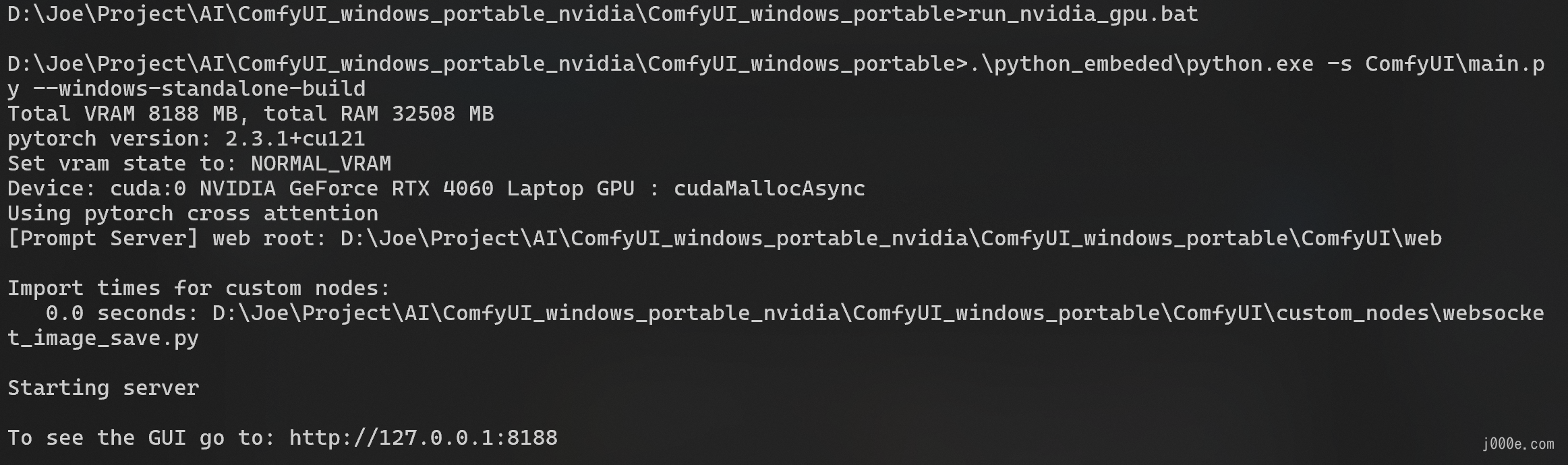

Run FLUX.1 On ComfyUI

In the ComfyUI_windows_portable directory, there are two files, run_cpu.bat and run_nvidia_gpu.bat. Choose which one to run according to the following instructions.

ComfyUI_windows_portable

├── ...Other files are omitted

├── run_cpu.bat // Double-click to start ComfyUI if you have an AMD graphics card or only a CPU

└── run_nvidia_gpu.bat // Double-click to start ComfyUI if you have an Nvidia graphics card

If you encounter the following error:

PermissionError: [Errno 13] error while attempting to bind on address ('127.0.0.1', 8188): an attempt was made to access a socket in a way forbidden by its access permissions

You can try opening CMD with administrator privileges and executing the following commands:

net stop winnat

net start winnat

Then run the startup script again.
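To check whether the port is still blocked before relaunching, a small script can attempt the same kind of bind that fails in the error above. This is a minimal sketch using Python's standard socket module; `can_bind` is an illustrative helper, not part of ComfyUI.

```python
import socket


def can_bind(host="127.0.0.1", port=8188):
    """Return True if a TCP socket can currently be bound to host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:  # PermissionError is a subclass of OSError
            return False
    return True


print("Port 8188 is free" if can_bind() else "Port 8188 cannot be bound")
```

Run it while ComfyUI is stopped; if it still reports that the port cannot be bound, something else is holding or reserving port 8188.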

As shown in the figure, when you see "To see the GUI go to: http://127.0.0.1:8188" in the console, ComfyUI has launched successfully. If the browser does not open automatically, open the address shown after "To see the GUI go to:" in your browser yourself; in the example above, that is http://127.0.0.1:8188.

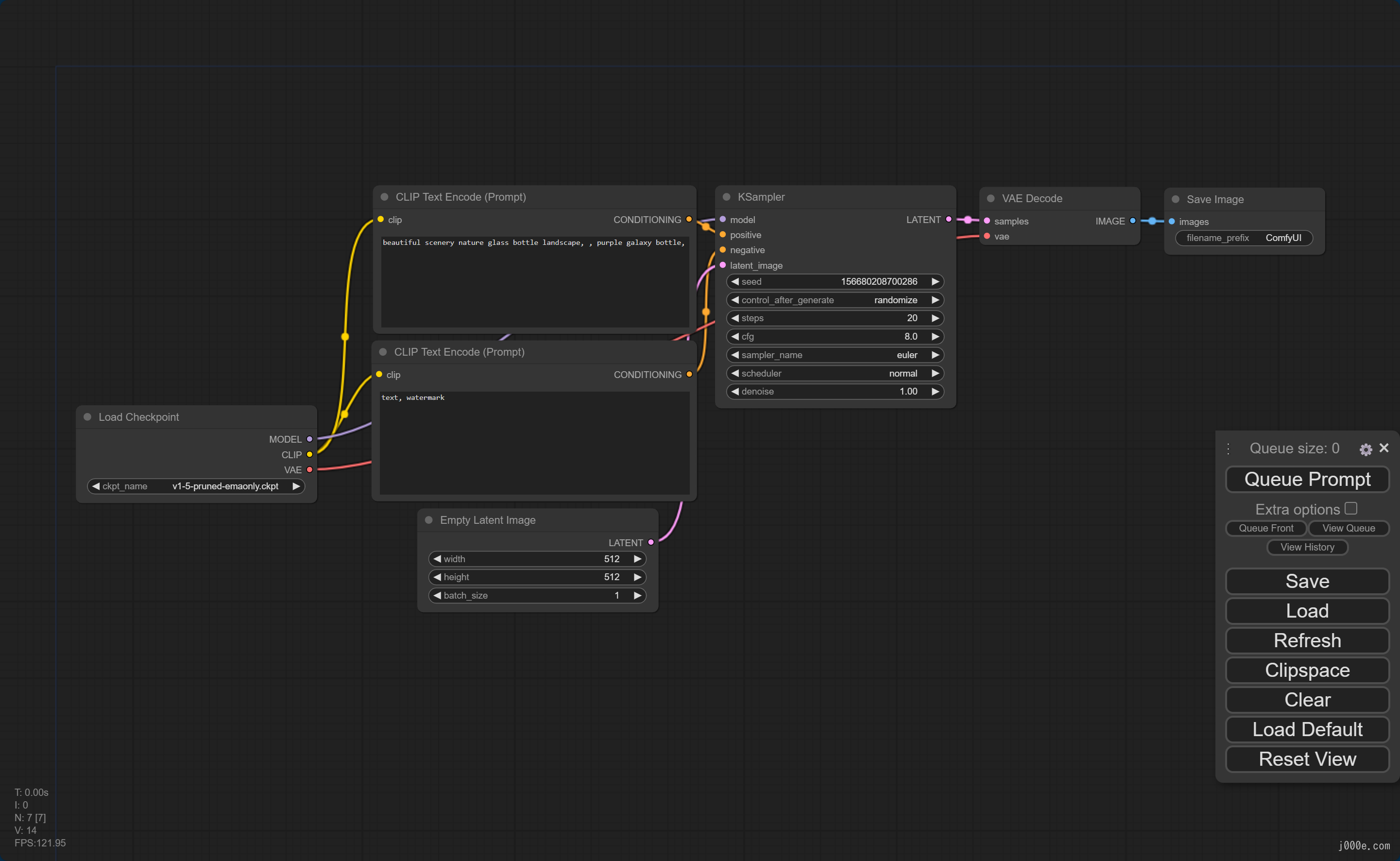

The opened webpage is as follows:

This is the default workflow. Let's leave the webpage open for now without closing anything and proceed to the next steps.

Select FLUX.1 ComfyUI Workflow Example

Building workflows in ComfyUI is a process that requires significant time and learning. If you prefer a more hands-on and ready-to-use experience, using a template is the best option. This article won't delve into how to build workflows but will provide some examples that you can directly run.

Choose the workflow example you need from below, copy the code, and save it as a json file (you can paste it into a txt, save it, and then change the file extension from txt to json). If you're unsure which one to choose, you can select the first one.

Go back to the workflow interface webpage you previously opened (if you haven't opened it yet, please refer to the previous section). On the main interface, click the load button on the right side to load the workflow file.
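Before loading a saved workflow file, it can help to confirm that it is valid JSON and to see which model files it expects. This is a rough sketch that relies only on the `nodes`, `type`, and `widgets_values` fields visible in the workflow examples in this article; the `sample` string is a tiny stand-in, not a real workflow.

```python
import json


def summarize_workflow(workflow_text):
    """Parse workflow JSON and return (node types, referenced .safetensors files)."""
    workflow = json.loads(workflow_text)  # raises ValueError on malformed JSON
    nodes = workflow["nodes"]
    node_types = sorted({node["type"] for node in nodes})
    model_files = sorted({
        value
        for node in nodes
        for value in node.get("widgets_values", [])
        if isinstance(value, str) and value.endswith(".safetensors")
    })
    return node_types, model_files


# Tiny stand-in for a real workflow file, trimmed to the fields used here.
sample = (
    '{"nodes": ['
    '{"type": "VAELoader", "widgets_values": ["ae.safetensors"]}, '
    '{"type": "SaveImage", "widgets_values": ["ComfyUI"]}'
    ']}'
)
node_types, model_files = summarize_workflow(sample)
print(node_types)
print(model_files)
```

For a real file, read it with `Path("workflow.json").read_text(encoding="utf-8")` (the filename is hypothetical) and compare the listed model files against what you placed in the models folders.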

Later in the article, we'll also include ready-to-use workflows for LoRA and ControlNet.

Find more workflow examples at: https://openart.ai/workflows/all?keyword=flux

FLUX.1 Dev ComfyUI Workflow Example

{"last_node_id":37,"last_link_id":116,"nodes":[{"id":11,"type":"DualCLIPLoader","pos":[48,288],"size":{"0":315,"1":106},"flags":{},"order":0,"mode":0,"outputs":[{"name":"CLIP","type":"CLIP","links":[10],"shape":3,"slot_index":0,"label":"CLIP"}],"properties":{"Node name for S&R":"DualCLIPLoader"},"widgets_values":["t5xxl_fp16.safetensors","clip_l.safetensors","flux"]},{"id":17,"type":"BasicScheduler","pos":[480,1008],"size":{"0":315,"1":106},"flags":{},"order":13,"mode":0,"inputs":[{"name":"model","type":"MODEL","link":55,"slot_index":0,"label":"model"}],"outputs":[{"name":"SIGMAS","type":"SIGMAS","links":[20],"shape":3,"label":"SIGMAS"}],"properties":{"Node name for S&R":"BasicScheduler"},"widgets_values":["simple",20,1]},{"id":16,"type":"KSamplerSelect","pos":[480,912],"size":{"0":315,"1":58},"flags":{},"order":1,"mode":0,"outputs":[{"name":"SAMPLER","type":"SAMPLER","links":[19],"shape":3,"label":"SAMPLER"}],"properties":{"Node name for S&R":"KSamplerSelect"},"widgets_values":["euler"]},{"id":26,"type":"FluxGuidance","pos":[480,144],"size":{"0":317.4000244140625,"1":58},"flags":{},"order":12,"mode":0,"inputs":[{"name":"conditioning","type":"CONDITIONING","link":41,"label":"conditioning"}],"outputs":[{"name":"CONDITIONING","type":"CONDITIONING","links":[42],"shape":3,"slot_index":0,"label":"CONDITIONING"}],"properties":{"Node name for S&R":"FluxGuidance"},"widgets_values":[3.5],"color":"#233","bgcolor":"#355"},{"id":22,"type":"BasicGuider","pos":[576,48],"size":{"0":222.3482666015625,"1":46},"flags":{},"order":14,"mode":0,"inputs":[{"name":"model","type":"MODEL","link":54,"slot_index":0,"label":"model"},{"name":"conditioning","type":"CONDITIONING","link":42,"slot_index":1,"label":"conditioning"}],"outputs":[{"name":"GUIDER","type":"GUIDER","links":[30],"shape":3,"slot_index":0,"label":"GUIDER"}],"properties":{"Node name for S&R":"BasicGuider"}},{"id":13,"type":"SamplerCustomAdvanced","pos":[864,192],"size":{"0":272.3617858886719,"1":124.53733825683594},"flags":{},"order":15,"mode":0,"inputs":[{"name":"noise","type":"NOISE","link":37,"slot_index":0,"label":"noise"},{"name":"guider","type":"GUIDER","link":30,"slot_index":1,"label":"guider"},{"name":"sampler","type":"SAMPLER","link":19,"slot_index":2,"label":"sampler"},{"name":"sigmas","type":"SIGMAS","link":20,"slot_index":3,"label":"sigmas"},{"name":"latent_image","type":"LATENT","link":116,"slot_index":4,"label":"latent_image"}],"outputs":[{"name":"output","type":"LATENT","links":[24],"shape":3,"slot_index":0,"label":"output"},{"name":"denoised_output","type":"LATENT","links":null,"shape":3,"label":"denoised_output"}],"properties":{"Node name for S&R":"SamplerCustomAdvanced"}},{"id":25,"type":"RandomNoise","pos":[480,768],"size":{"0":315,"1":82},"flags":{},"order":2,"mode":0,"outputs":[{"name":"NOISE","type":"NOISE","links":[37],"shape":3,"label":"NOISE"}],"properties":{"Node name for S&R":"RandomNoise"},"widgets_values":[219670278747233,"randomize"],"color":"#2a363b","bgcolor":"#3f5159"},{"id":8,"type":"VAEDecode","pos":[866,367],"size":{"0":210,"1":46},"flags":{},"order":16,"mode":0,"inputs":[{"name":"samples","type":"LATENT","link":24,"label":"samples"},{"name":"vae","type":"VAE","link":12,"label":"vae"}],"outputs":[{"name":"IMAGE","type":"IMAGE","links":[9],"slot_index":0,"label":"IMAGE"}],"properties":{"Node name for S&R":"VAEDecode"}},{"id":6,"type":"CLIPTextEncode","pos":[384,240],"size":{"0":422.84503173828125,"1":164.31304931640625},"flags":{},"order":9,"mode":0,"inputs":[{"name":"clip","type":"CLIP","link":10,"label":"clip"}],"outputs":[{"name":"CONDITIONING","type":"CONDITIONING","links":[41],"slot_index":0,"label":"CONDITIONING"}],"title":"CLIP Text Encode (Positive Prompt)","properties":{"Node name for S&R":"CLIPTextEncode"},"widgets_values":["cute anime girl with massive fluffy fennec ears and a big fluffy tail blonde messy long hair blue eyes wearing a maid outfit with a long black gold leaf pattern dress and a white apron mouth open holding a fancy black forest cake with candles on top in the kitchen of an old dark Victorian mansion lit by candlelight with a bright window to the foggy forest and very expensive stuff everywhere"],"color":"#232","bgcolor":"#353"},{"id":30,"type":"ModelSamplingFlux","pos":[480,1152],"size":{"0":315,"1":130},"flags":{},"order":11,"mode":0,"inputs":[{"name":"model","type":"MODEL","link":56,"slot_index":0,"label":"model"},{"name":"width","type":"INT","link":115,"widget":{"name":"width"},"slot_index":1,"label":"width"},{"name":"height","type":"INT","link":114,"widget":{"name":"height"},"slot_index":2,"label":"height"}],"outputs":[{"name":"MODEL","type":"MODEL","links":[54,55],"shape":3,"slot_index":0,"label":"MODEL"}],"properties":{"Node name for S&R":"ModelSamplingFlux"},"widgets_values":[1.15,0.5,1024,1024]},{"id":27,"type":"EmptySD3LatentImage","pos":[480,624],"size":{"0":315,"1":106},"flags":{},"order":10,"mode":0,"inputs":[{"name":"width","type":"INT","link":112,"widget":{"name":"width"},"label":"width"},{"name":"height","type":"INT","link":113,"widget":{"name":"height"},"label":"height"}],"outputs":[{"name":"LATENT","type":"LATENT","links":[116],"shape":3,"slot_index":0,"label":"LATENT"}],"properties":{"Node name for S&R":"EmptySD3LatentImage"},"widgets_values":[1024,1024,1]},{"id":34,"type":"PrimitiveNode","pos":[432,480],"size":{"0":210,"1":82},"flags":{},"order":3,"mode":0,"outputs":[{"name":"INT","type":"INT","links":[112,115],"slot_index":0,"widget":{"name":"width"},"label":"INT"}],"title":"width","properties":{"Run widget replace on values":false},"widgets_values":[1024,"fixed"],"color":"#323","bgcolor":"#535"},{"id":35,"type":"PrimitiveNode","pos":[672,480],"size":{"0":210,"1":82},"flags":{},"order":4,"mode":0,"outputs":[{"name":"INT","type":"INT","links":[113,114],"widget":{"name":"height"},"slot_index":0,"label":"INT"}],"title":"height","properties":{"Run widget replace on values":false},"widgets_values":[1024,"fixed"],"color":"#323","bgcolor":"#535"},{"id":12,"type":"UNETLoader","pos":[48,144],"size":{"0":315,"1":82},"flags":{},"order":5,"mode":0,"outputs":[{"name":"MODEL","type":"MODEL","links":[56],"shape":3,"slot_index":0,"label":"MODEL"}],"properties":{"Node name for S&R":"UNETLoader"},"widgets_values":["flux1-dev.safetensors","default"],"color":"#223","bgcolor":"#335"},{"id":9,"type":"SaveImage","pos":[1155,196],"size":{"0":985.3012084960938,"1":1060.3828125},"flags":{},"order":17,"mode":0,"inputs":[{"name":"images","type":"IMAGE","link":9,"label":"images"}],"properties":{},"widgets_values":["ComfyUI"]},{"id":37,"type":"Note","pos":[480,1344],"size":{"0":314.99755859375,"1":117.98363494873047},"flags":{},"order":6,"mode":0,"properties":{"text":""},"widgets_values":["The reference sampling implementation auto adjusts the shift value based on the resolution, if you don't want this you can just bypass (CTRL-B) this ModelSamplingFlux node.\n"],"color":"#432","bgcolor":"#653"},{"id":10,"type":"VAELoader","pos":[48,432],"size":{"0":311.81634521484375,"1":60.429901123046875},"flags":{},"order":7,"mode":0,"outputs":[{"name":"VAE","type":"VAE","links":[12],"shape":3,"slot_index":0,"label":"VAE"}],"properties":{"Node name for S&R":"VAELoader"},"widgets_values":["ae.safetensors"]},{"id":28,"type":"Note","pos":[48,576],"size":{"0":336,"1":288},"flags":{},"order":8,"mode":0,"properties":{"text":""},"widgets_values":["If you get an error in any of the nodes above make sure the files are in the correct directories.\n\nSee the top of the examples page for the links : https://comfyanonymous.github.io/ComfyUI_examples/flux/\n\nflux1-dev.safetensors goes in: ComfyUI/models/unet/\n\nt5xxl_fp16.safetensors and clip_l.safetensors go in: ComfyUI/models/clip/\n\nae.safetensors goes in: ComfyUI/models/vae/\n\n\nTip: You can set the weight_dtype above to one of the fp8 types if you have memory issues."],"color":"#432","bgcolor":"#653"}],"links":[[9,8,0,9,0,"IMAGE"],[10,11,0,6,0,"CLIP"],[12,10,0,8,1,"VAE"],[19,16,0,13,2,"SAMPLER"],[20,17,0,13,3,"SIGMAS"],[24,13,0,8,0,"LATENT"],[30,22,0,13,1,"GUIDER"],[37,25,0,13,0,"NOISE"],[41,6,0,26,0,"CONDITIONING"],[42,26,0,22,1,"CONDITIONING"],[54,30,0,22,0,"MODEL"],[55,30,0,17,0,"MODEL"],[56,12,0,30,0,"MODEL"],[112,34,0,27,0,"INT"],[113,35,0,27,1,"INT"],[114,35,0,30,2,"INT"],[115,34,0,30,1,"INT"],[116,27,0,13,4,"LATENT"]],"groups":[],"config":{},"extra":{"ds":{"scale":1.1,"offset":[-0.17937541249087297,2.2890951150661545]},"groupNodes":{}},"version":0.4}

FLUX.1 Schnell ComfyUI Workflow Example

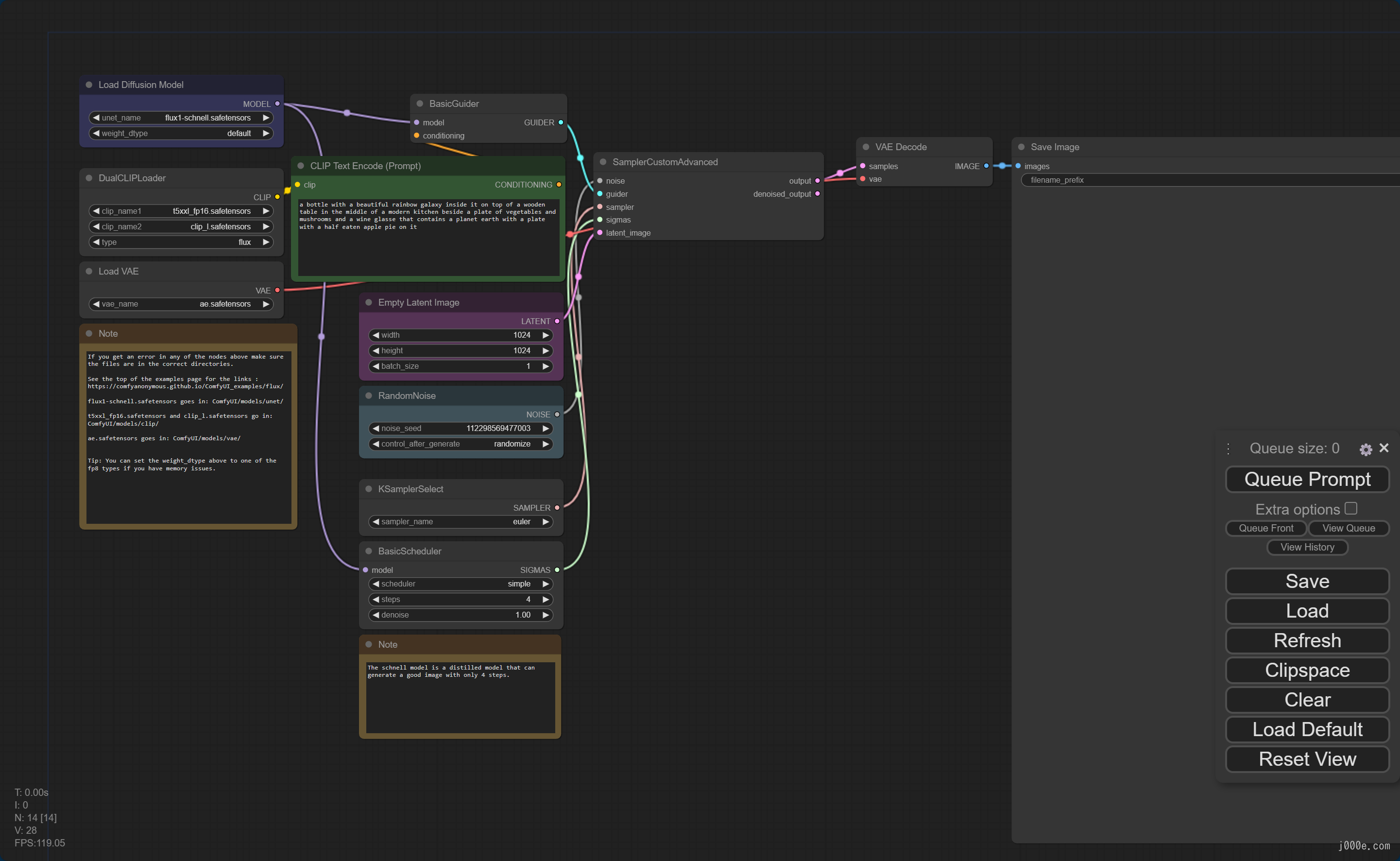

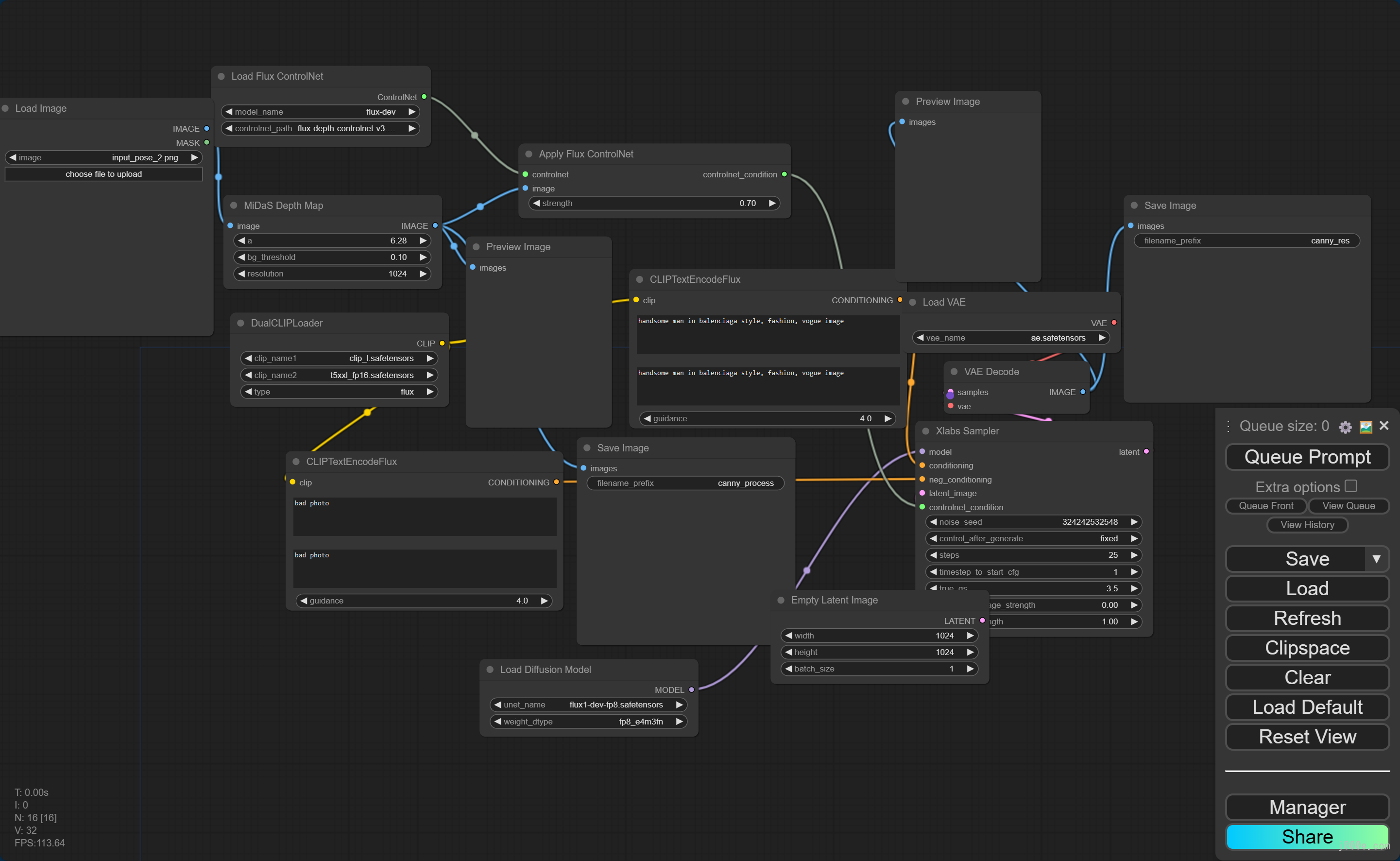

{"last_node_id":36,"last_link_id":58,"nodes":[{"id":33,"type":"CLIPTextEncode","pos":[390,400],"size":{"0":422.84503173828125,"1":164.31304931640625},"flags":{"collapsed":true},"order":4,"mode":0,"inputs":[{"name":"clip","type":"CLIP","link":54,"slot_index":0,"label":"clip"}],"outputs":[{"name":"CONDITIONING","type":"CONDITIONING","links":[55],"slot_index":0,"label":"CONDITIONING"}],"title":"CLIP Text Encode (Negative Prompt)","properties":{"Node name for S&R":"CLIPTextEncode"},"widgets_values":[""],"color":"#322","bgcolor":"#533"},{"id":27,"type":"EmptySD3LatentImage","pos":[471,455],"size":{"0":315,"1":106},"flags":{},"order":0,"mode":0,"outputs":[{"name":"LATENT","type":"LATENT","links":[51],"shape":3,"slot_index":0,"label":"LATENT"}],"properties":{"Node name for S&R":"EmptySD3LatentImage"},"widgets_values":[1024,1024,1],"color":"#323","bgcolor":"#535"},{"id":8,"type":"VAEDecode","pos":[1151,195],"size":{"0":210,"1":46},"flags":{},"order":6,"mode":0,"inputs":[{"name":"samples","type":"LATENT","link":52,"label":"samples"},{"name":"vae","type":"VAE","link":46,"label":"vae"}],"outputs":[{"name":"IMAGE","type":"IMAGE","links":[9],"slot_index":0,"label":"IMAGE"}],"properties":{"Node name for S&R":"VAEDecode"}},{"id":9,"type":"SaveImage","pos":[1375,194],"size":{"0":985.3012084960938,"1":1060.3828125},"flags":{},"order":7,"mode":0,"inputs":[{"name":"images","type":"IMAGE","link":9,"label":"images"}],"properties":{},"widgets_values":["ComfyUI"]},{"id":31,"type":"KSampler","pos":[816,192],"size":{"0":315,"1":262},"flags":{},"order":5,"mode":0,"inputs":[{"name":"model","type":"MODEL","link":47,"label":"model"},{"name":"positive","type":"CONDITIONING","link":58,"label":"positive"},{"name":"negative","type":"CONDITIONING","link":55,"label":"negative"},{"name":"latent_image","type":"LATENT","link":51,"label":"latent_image"}],"outputs":[{"name":"LATENT","type":"LATENT","links":[52],"shape":3,"slot_index":0,"label":"LATENT"}],"properties":{"Node name for S&R":"KSampler"},"widgets_values":[173805153958730,"randomize",4,1,"euler","simple",1]},{"id":30,"type":"CheckpointLoaderSimple","pos":[48,192],"size":{"0":315,"1":98},"flags":{},"order":1,"mode":0,"outputs":[{"name":"MODEL","type":"MODEL","links":[47],"shape":3,"slot_index":0,"label":"MODEL"},{"name":"CLIP","type":"CLIP","links":[45,54],"shape":3,"slot_index":1,"label":"CLIP"},{"name":"VAE","type":"VAE","links":[46],"shape":3,"slot_index":2,"label":"VAE"}],"properties":{"Node name for S&R":"CheckpointLoaderSimple"},"widgets_values":["flux1-schnell-fp8.safetensors"]},{"id":6,"type":"CLIPTextEncode","pos":[384,192],"size":{"0":422.84503173828125,"1":164.31304931640625},"flags":{},"order":3,"mode":0,"inputs":[{"name":"clip","type":"CLIP","link":45,"label":"clip"}],"outputs":[{"name":"CONDITIONING","type":"CONDITIONING","links":[58],"slot_index":0,"label":"CONDITIONING"}],"title":"CLIP Text Encode (Positive Prompt)","properties":{"Node name for S&R":"CLIPTextEncode"},"widgets_values":["a bottle with a beautiful rainbow galaxy inside it on top of a wooden table in the middle of a modern kitchen beside a plate of vegetables and mushrooms and a wine glasse that contains a planet earth with a plate with a half eaten apple pie on it"],"color":"#232","bgcolor":"#353"},{"id":34,"type":"Note","pos":[831,501],"size":{"0":282.8617858886719,"1":164.08004760742188},"flags":{},"order":2,"mode":0,"properties":{"text":""},"widgets_values":["Note that Flux dev and schnell do not have any negative prompt so CFG should be set to 1.0. Setting CFG to 1.0 means the negative prompt is ignored.\n\nThe schnell model is a distilled model that can generate a good image with only 4 steps."],"color":"#432","bgcolor":"#653"}],"links":[[9,8,0,9,0,"IMAGE"],[45,30,1,6,0,"CLIP"],[46,30,2,8,1,"VAE"],[47,30,0,31,0,"MODEL"],[51,27,0,31,3,"LATENT"],[52,31,0,8,0,"LATENT"],[54,30,1,33,0,"CLIP"],[55,33,0,31,2,"CONDITIONING"],[58,6,0,31,1,"CONDITIONING"]],"groups":[],"config":{},"extra":{"ds":{"scale":1.1,"offset":[1.1666219579074508,1.8290357611967831]}},"version":0.4}

Once the workflow is loaded, it looks like the image below:

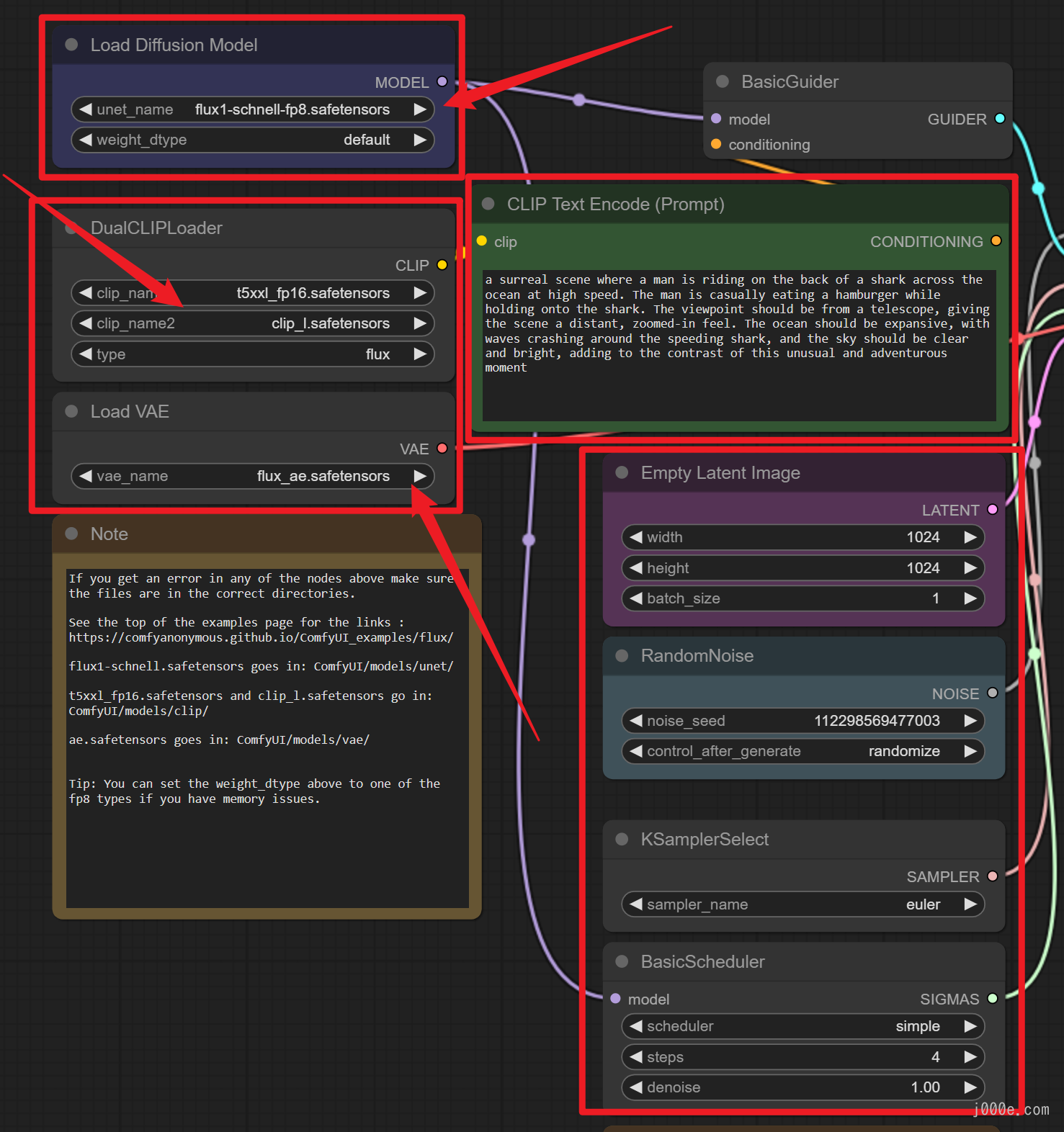

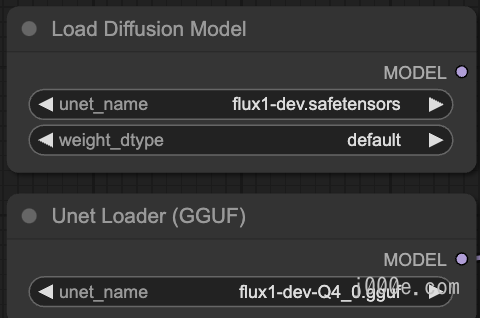

Please note that the configuration in the workflow template might differ from your actual setup. Carefully check and edit the model selection information in the Load Diffusion Model, DualCLIPLoader, and Load VAE sections on the left side of the image below to ensure you are using the versions you just downloaded.
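To see at a glance which model files a workflow expects before you load it, you can scan the saved JSON yourself. The sketch below is not part of ComfyUI. It assumes the layout visible in the workflow above (a top-level "nodes" list whose entries carry "type" and "widgets_values"), and the function name and suffix list are illustrative choices.

```python
import json

# File extensions treated as model files (an assumption; extend as needed).
MODEL_SUFFIXES = (".safetensors", ".sft", ".gguf", ".ckpt", ".pt")

def referenced_model_files(workflow: dict) -> list[tuple[str, str]]:
    """Return (node type, file name) pairs for every model file a workflow names."""
    found = []
    for node in workflow.get("nodes", []):
        for value in node.get("widgets_values", []):
            # Some custom loaders store the name as {"content": "<file>", ...}.
            if isinstance(value, dict):
                value = value.get("content")
            if isinstance(value, str) and value.endswith(MODEL_SUFFIXES):
                found.append((node["type"], value))
    return found

# Trimmed-down stand-in for a saved workflow; load a real one with json.load().
example = json.loads("""
{"nodes": [
  {"id": 30, "type": "CheckpointLoaderSimple",
   "widgets_values": ["flux1-schnell-fp8.safetensors"]},
  {"id": 27, "type": "EmptySD3LatentImage", "widgets_values": [1024, 1024, 1]}
]}
""")

for node_type, file_name in referenced_model_files(example):
    print(f"{node_type}: {file_name}")
```

Compare the printed names with the files in your models folders. Any name that does not match is a node you will need to edit after loading.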

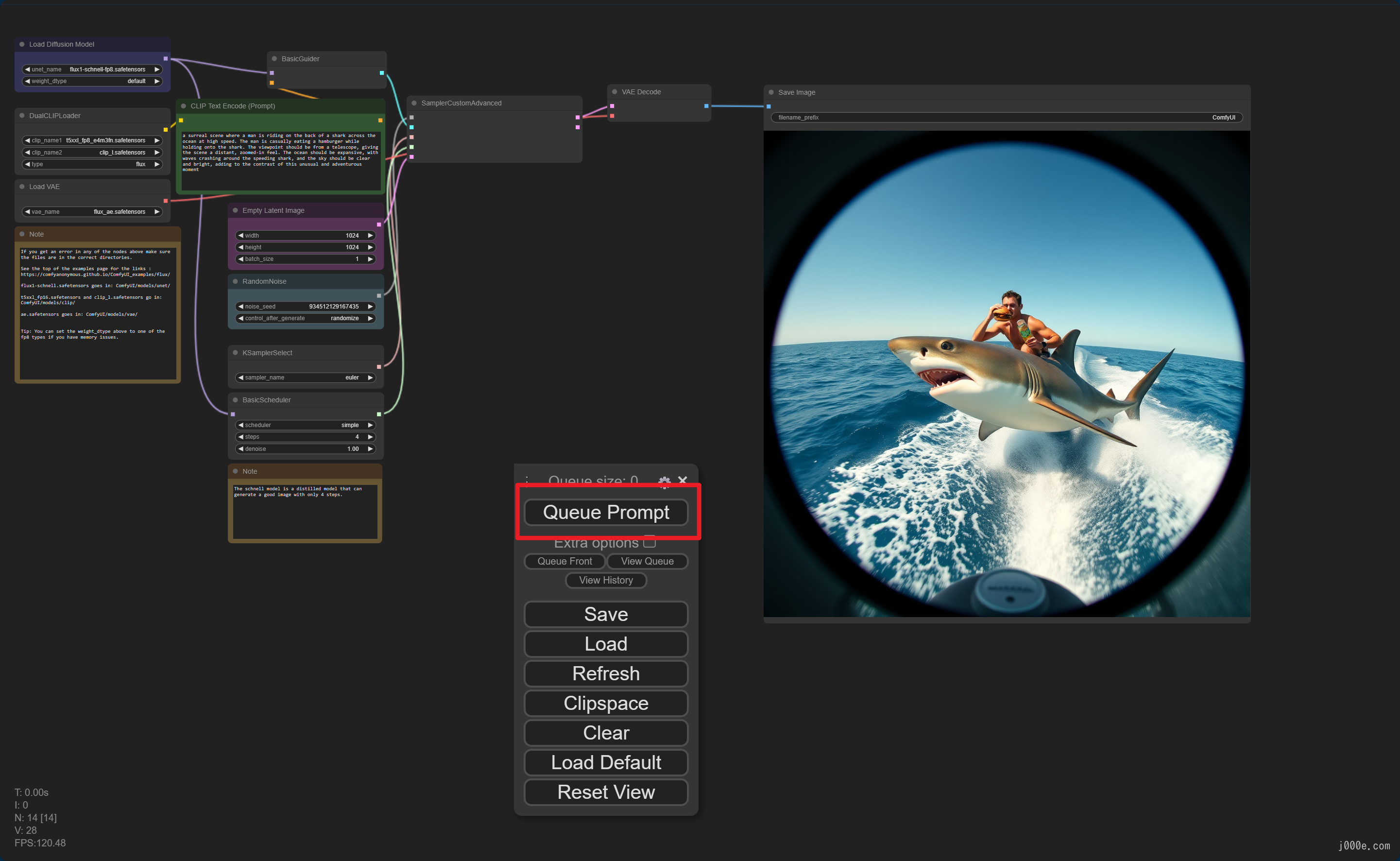

Enter your prompt in the green box on the right side and click Queue Prompt on the floating toolbar to start generating images. If there are any errors, check the configuration of the nodes that turn red to ensure they are correct.

At this point, you can use various versions of the FLUX models to generate images. An example of an image generated using the FLUX Schnell FP8 model is shown below:

LoRA (Advanced Usage)

Here is a website where you can experience the FLUX.1 LoRA model online:

https://huggingface.co/spaces/multimodalart/flux-lora-the-explorer

LoRA (Low-Rank Adaptation) is used in text-to-image generation to fine-tune large pre-trained models like Stable Diffusion at a much lower computational cost. Instead of updating all of the model's weights, LoRA trains a small set of low-rank matrices that are added to the frozen original weights, so the model can adapt to specific styles, subjects, or domains without being retrained in full.

Suppose you have a pre-trained text-to-image model, and you want it to generate images in a specific artistic style, like "Van Gogh-like landscapes." Instead of retraining the whole model, which would be resource-intensive, you use LoRA to fine-tune the model on a smaller dataset of Van Gogh-style images paired with relevant text descriptions. This allows the model to learn the nuances of that style and apply it to new text prompts, generating images with the desired artistic characteristics.
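To make the cost saving concrete, here is a minimal sketch of the low-rank idea in plain Python. It is not how FLUX.1 or ComfyUI implements LoRA. The layer size (3072 x 3072) and rank (16) are hypothetical numbers chosen only for illustration, and the function names are made up for this example.

```python
def lora_param_counts(d_out: int, d_in: int, rank: int) -> tuple[int, int]:
    """Trainable parameters for full fine-tuning vs. a rank-`rank` LoRA update."""
    full = d_out * d_in
    lora = rank * (d_out + d_in)  # B is d_out x rank, A is rank x d_in
    return full, lora

def apply_lora(W, B, A, scale=1.0):
    """Return W + scale * (B @ A), using plain nested lists."""
    rank = len(A)
    return [
        [W[i][j] + scale * sum(B[i][k] * A[k][j] for k in range(rank))
         for j in range(len(W[0]))]
        for i in range(len(W))
    ]

# Hypothetical layer: a 3072 x 3072 weight matrix adapted with rank 16.
full, lora = lora_param_counts(3072, 3072, 16)
print(full, lora)  # 9437184 98304

# Tiny worked example: a 2 x 2 identity matrix plus a rank-1 update at half strength.
W = [[1.0, 0.0], [0.0, 1.0]]
B = [[1.0], [2.0]]
A = [[3.0, 4.0]]
print(apply_lora(W, B, A, scale=0.5))  # [[2.5, 2.0], [3.0, 5.0]]
```

The `scale` argument plays roughly the role of the LoRA strength value you set in a LoRA loader node: lower values blend in less of the learned style.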

Download the LoRA Model

You can download FLUX.1 LoRA models and other resources from:

Here, we choose the LoRA created by the XLabs-AI team:

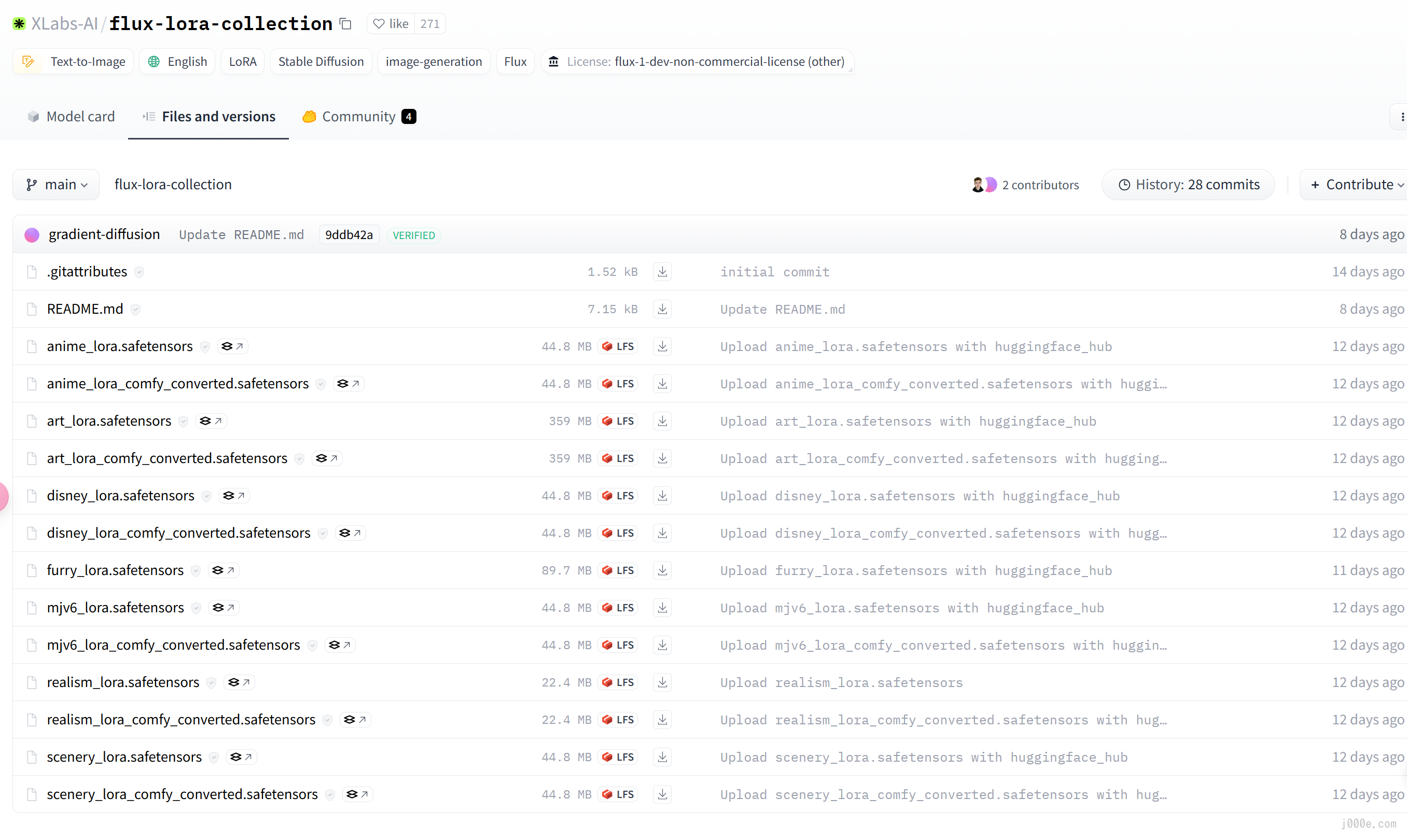

https://huggingface.co/XLabs-AI/flux-lora-collection/tree/main

Currently, XLabs has released 7 LoRA models for FLUX.1: Anime, Art, Disney, MJV6, Plush, Realistic, and Scenery. Download the LoRA files you need whose names contain comfy_converted and place them in the ComfyUI\models\loras directory.

For LoRA models without comfy_converted in the name, you need to install the x-flux-comfyui plugin and place the files in the ComfyUI/models/xlabs/loras directory to use them.
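The placement rule above can be written as a small helper. This is only a sketch of the rule as described in this guide. The function name and the two file names are illustrative, and "ComfyUI" stands in for wherever your installation lives.

```python
from pathlib import Path

def lora_destination(comfyui_root: str, lora_file: str) -> Path:
    """Pick the target folder for an XLabs LoRA file based on its name."""
    name = Path(lora_file).name
    root = Path(comfyui_root)
    if "comfy_converted" in name:
        # Converted files are placed in the regular ComfyUI LoRA folder.
        return root / "models" / "loras" / name
    # Other files go in the folder used by the x-flux-comfyui plugin.
    return root / "models" / "xlabs" / "loras" / name

print(lora_destination("ComfyUI", "anime_lora_comfy_converted.safetensors").as_posix())
# ComfyUI/models/loras/anime_lora_comfy_converted.safetensors
print(lora_destination("ComfyUI", "anime_lora.safetensors").as_posix())
# ComfyUI/models/xlabs/loras/anime_lora.safetensors
```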

LoRA Workflow

When using FLUX.1 LoRA in ComfyUI, there are also 2 different workflows available: one is based on the native workflow, where the main model is stored in the Unet folder; the other is a simplified workflow suitable for the fp8 model released by ComfyUI, where the main model is placed in the checkpoints folder.

Native Workflow:

{"last_node_id":89,"last_link_id":125,"nodes":[{"id":12,"type":"UNETLoader","pos":[44,101],"size":[326.5508155800454,82],"flags":{},"order":0,"mode":0,"outputs":[{"name":"MODEL","type":"MODEL","links":[107],"slot_index":0,"shape":3,"label":"MODEL"}],"properties":{"Node name for S&R":"UNETLoader"},"widgets_values":["flux1-dev-fp8-kijai.safetensors","fp8_e4m3fn"]},{"id":11,"type":"DualCLIPLoader","pos":[37,245],"size":[324.94479196498867,106.7058248015365],"flags":{},"order":1,"mode":0,"outputs":[{"name":"CLIP","type":"CLIP","links":[108],"slot_index":0,"shape":3,"label":"CLIP"}],"properties":{"Node name for S&R":"DualCLIPLoader"},"widgets_values":["t5xxl_fp8_e4m3fn.safetensors","clip_l.safetensors","flux"]},{"id":85,"type":"CR SDXL Aspect Ratio","pos":[34,408],"size":{"0":329.5428161621094,"1":278.98809814453125},"flags":{},"order":2,"mode":0,"outputs":[{"name":"width","type":"INT","links":[122],"slot_index":0,"shape":3,"label":"width"},{"name":"height","type":"INT","links":[123],"slot_index":1,"shape":3,"label":"height"},{"name":"upscale_factor","type":"FLOAT","links":null,"shape":3,"label":"upscale_factor"},{"name":"batch_size","type":"INT","links":null,"shape":3,"label":"batch_size"},{"name":"empty_latent","type":"LATENT","links":[124],"slot_index":4,"shape":3,"label":"empty_latent"},{"name":"show_help","type":"STRING","links":null,"shape":3,"label":"show_help"}],"properties":{"Node name for S&R":"CR SDXL Aspect Ratio"},"widgets_values":[512,768,"custom","Off",1,1]},{"id":25,"type":"RandomNoise","pos":[1022,95],"size":{"0":285.3142395019531,"1":83.42333221435547},"flags":{},"order":3,"mode":0,"outputs":[{"name":"NOISE","type":"NOISE","links":[37],"shape":3,"label":"NOISE"}],"properties":{"Node name for S&R":"RandomNoise"},"widgets_values":[599336083703690,"randomize"]},{"id":22,"type":"BasicGuider","pos":[1028,225],"size":{"0":260,"1":60},"flags":{"collapsed":false},"order":12,"mode":0,"inputs":[{"name":"model","type":"MODEL","link":94,"slot_index":0,"label":"model"},{"name":"conditioning","type":"CONDITIONING","link":87,"slot_index":1,"label":"conditioning"}],"outputs":[{"name":"GUIDER","type":"GUIDER","links":[30],"slot_index":0,"shape":3,"label":"GUIDER"}],"properties":{"Node name for S&R":"BasicGuider"}},{"id":16,"type":"KSamplerSelect","pos":[1033,332],"size":{"0":260,"1":60},"flags":{},"order":4,"mode":0,"outputs":[{"name":"SAMPLER","type":"SAMPLER","links":[19],"shape":3,"label":"SAMPLER"}],"properties":{"Node name for S&R":"KSamplerSelect"},"widgets_values":["dpmpp_2m"]},{"id":10,"type":"VAELoader","pos":[1338,102],"size":{"0":230,"1":60},"flags":{},"order":5,"mode":0,"outputs":[{"name":"VAE","type":"VAE","links":[12],"slot_index":0,"shape":3,"label":"VAE"}],"properties":{"Node name for S&R":"VAELoader"},"widgets_values":["flux_ae.sft"]},{"id":88,"type":"Reroute","pos":[509,400],"size":[75,26],"flags":{},"order":8,"mode":0,"inputs":[{"name":"","type":"*","link":124,"label":""}],"outputs":[{"name":"","type":"LATENT","links":[125],"slot_index":0,"label":""}],"properties":{"showOutputText":false,"horizontal":false}},{"id":13,"type":"SamplerCustomAdvanced","pos":[1339,223],"size":{"0":240,"1":326},"flags":{},"order":13,"mode":0,"inputs":[{"name":"noise","type":"NOISE","link":37,"slot_index":0,"label":"noise"},{"name":"guider","type":"GUIDER","link":30,"slot_index":1,"label":"guider"},{"name":"sampler","type":"SAMPLER","link":19,"slot_index":2,"label":"sampler"},{"name":"sigmas","type":"SIGMAS","link":20,"slot_index":3,"label":"sigmas"},{"name":"latent_image","type":"LATENT","link":125,"slot_index":4,"label":"latent_image"}],"outputs":[{"name":"output","type":"LATENT","links":[24],"slot_index":0,"shape":3,"label":"output"},{"name":"denoised_output","type":"LATENT","links":null,"shape":3,"label":"denoised_output"}],"properties":{"Node name for S&R":"SamplerCustomAdvanced"}},{"id":8,"type":"VAEDecode","pos":[1367,603],"size":{"0":140,"1":50},"flags":{},"order":14,"mode":0,"inputs":[{"name":"samples","type":"LATENT","link":24,"label":"samples"},{"name":"vae","type":"VAE","link":12,"label":"vae"}],"outputs":[{"name":"IMAGE","type":"IMAGE","links":[9],"slot_index":0,"label":"IMAGE"}],"properties":{"Node name for S&R":"VAEDecode"}},{"id":9,"type":"SaveImage","pos":[484,480],"size":[438.85816905239017,570.8073824148398],"flags":{},"order":15,"mode":0,"inputs":[{"name":"images","type":"IMAGE","link":9,"label":"images"}],"properties":{"Node name for S&R":"SaveImage"},"widgets_values":["MarkuryFLUX"]},{"id":60,"type":"FluxGuidance","pos":[750,309],"size":{"0":211.60000610351562,"1":60},"flags":{},"order":10,"mode":0,"inputs":[{"name":"conditioning","type":"CONDITIONING","link":86,"label":"conditioning"}],"outputs":[{"name":"CONDITIONING","type":"CONDITIONING","links":[87],"slot_index":0,"shape":3,"label":"CONDITIONING"}],"properties":{"Node name for S&R":"FluxGuidance"},"widgets_values":[3.5],"color":"#323","bgcolor":"#535"},{"id":61,"type":"ModelSamplingFlux","pos":[746,116],"size":{"0":240,"1":122},"flags":{},"order":9,"mode":0,"inputs":[{"name":"model","type":"MODEL","link":106,"label":"model"},{"name":"width","type":"INT","link":122,"widget":{"name":"width"},"label":"width"},{"name":"height","type":"INT","link":123,"widget":{"name":"height"},"label":"height"}],"outputs":[{"name":"MODEL","type":"MODEL","links":[93,94],"slot_index":0,"shape":3,"label":"MODEL"}],"properties":{"Node name for S&R":"ModelSamplingFlux"},"widgets_values":[1.15,0.5,1024,1024]},{"id":17,"type":"BasicScheduler","pos":[998,477],"size":{"0":260,"1":110},"flags":{},"order":11,"mode":0,"inputs":[{"name":"model","type":"MODEL","link":93,"slot_index":0,"label":"model"}],"outputs":[{"name":"SIGMAS","type":"SIGMAS","links":[20],"shape":3,"label":"SIGMAS"}],"properties":{"Node name for S&R":"BasicScheduler"},"widgets_values":["sgm_uniform",25,1]},{"id":72,"type":"LoraLoaderModelOnly","pos":[407,109],"size":{"0":310,"1":82},"flags":{},"order":6,"mode":0,"inputs":[{"name":"model","type":"MODEL","link":107,"label":"model"}],"outputs":[{"name":"MODEL","type":"MODEL","links":[106],"slot_index":0,"shape":3,"label":"MODEL"}],"properties":{"Node name for S&R":"LoraLoaderModelOnly"},"widgets_values":["flux_realism_lora.safetensors",0.6]},{"id":6,"type":"CLIPTextEncode","pos":[411,269],"size":[294.2174415566078,103.19063606418729],"flags":{"collapsed":false},"order":7,"mode":0,"inputs":[{"name":"clip","type":"CLIP","link":108,"label":"clip"}],"outputs":[{"name":"CONDITIONING","type":"CONDITIONING","links":[86],"slot_index":0,"label":"CONDITIONING"}],"properties":{"Node name for S&R":"CLIPTextEncode"},"widgets_values":["a dog in the park, in the style of TOK a trtcrd, tarot style"],"color":"#232","bgcolor":"#353"}],"links":[[9,8,0,9,0,"IMAGE"],[12,10,0,8,1,"VAE"],[19,16,0,13,2,"SAMPLER"],[20,17,0,13,3,"SIGMAS"],[24,13,0,8,0,"LATENT"],[30,22,0,13,1,"GUIDER"],[37,25,0,13,0,"NOISE"],[86,6,0,60,0,"CONDITIONING"],[87,60,0,22,1,"CONDITIONING"],[93,61,0,17,0,"MODEL"],[94,61,0,22,0,"MODEL"],[106,72,0,61,0,"MODEL"],[107,12,0,72,0,"MODEL"],[108,11,0,6,0,"CLIP"],[122,85,0,61,1,"INT"],[123,85,1,61,2,"INT"],[124,85,4,88,0,"*"],[125,88,0,13,4,"LATENT"]],"groups":[],"config":{},"extra":{"ds":{"scale":1.1,"offset":[259.026128468271,8.068685322077368]}},"version":0.4}

This workflow uses the CR SDXL Aspect Ratio node, which is provided by https://github.com/Suzie1/ComfyUI_Comfyroll_CustomNodes.

Clone the repository into your ComfyUI custom_nodes directory: git clone https://github.com/Suzie1/ComfyUI_Comfyroll_CustomNodes.git

After cloning, restart ComfyUI for the changes to take effect.

Simplified Workflow:

{"last_node_id":20,"last_link_id":23,"nodes":[{"id":10,"type":"CLIPTextEncode","pos":[98,-17],"size":{"0":285.6000061035156,"1":135.63133239746094},"flags":{},"order":5,"mode":0,"inputs":[{"name":"clip","type":"CLIP","link":22,"label":"clip"}],"outputs":[{"name":"CONDITIONING","type":"CONDITIONING","links":[19],"slot_index":0,"label":"CONDITIONING"}],"title":"CLIP Text Encode (Positive Prompt)","properties":{"Node name for S&R":"CLIPTextEncode"},"widgets_values":["a coca cola can \"sacred elixir\" arcana in the style of TOK a trtcrd, tarot style"],"color":"#232","bgcolor":"#353"},{"id":11,"type":"VAEDecode","pos":[882,-390],"size":{"0":210,"1":46},"flags":{},"order":8,"mode":0,"inputs":[{"name":"samples","type":"LATENT","link":11,"label":"samples"},{"name":"vae","type":"VAE","link":12,"label":"vae"}],"outputs":[{"name":"IMAGE","type":"IMAGE","links":[13],"slot_index":0,"label":"IMAGE"}],"properties":{"Node name for S&R":"VAEDecode"}},{"id":12,"type":"SaveImage","pos":[751,-266],"size":{"0":377.812744140625,"1":626.8323974609375},"flags":{},"order":9,"mode":0,"inputs":[{"name":"images","type":"IMAGE","link":13,"label":"images"}],"properties":{},"widgets_values":["ComfyUI"]},{"id":13,"type":"EmptySD3LatentImage","pos":[468,-273],"size":{"0":218.44395446777344,"1":106},"flags":{},"order":0,"mode":0,"outputs":[{"name":"LATENT","type":"LATENT","links":[17],"slot_index":0,"shape":3,"label":"LATENT"}],"properties":{"Node name for S&R":"EmptySD3LatentImage"},"widgets_values":[512,768,1],"color":"#323","bgcolor":"#535"},{"id":14,"type":"CheckpointLoaderSimple","pos":[102,-386],"size":{"0":307.93389892578125,"1":98},"flags":{},"order":1,"mode":0,"outputs":[{"name":"MODEL","type":"MODEL","links":[20],"slot_index":0,"shape":3,"label":"MODEL"},{"name":"CLIP","type":"CLIP","links":[18,23],"slot_index":1,"shape":3,"label":"CLIP"},{"name":"VAE","type":"VAE","links":[12],"slot_index":2,"shape":3,"label":"VAE"}],"properties":{"Node name for S&R":"CheckpointLoaderSimple"},"widgets_values":["flux1-dev-fp8.safetensors"]},{"id":15,"type":"KSampler","pos":[438,-108],"size":{"0":272.14605712890625,"1":473.0747985839844},"flags":{},"order":7,"mode":0,"inputs":[{"name":"model","type":"MODEL","link":21,"label":"model"},{"name":"positive","type":"CONDITIONING","link":15,"label":"positive"},{"name":"negative","type":"CONDITIONING","link":16,"label":"negative"},{"name":"latent_image","type":"LATENT","link":17,"label":"latent_image"}],"outputs":[{"name":"LATENT","type":"LATENT","links":[11],"slot_index":0,"shape":3,"label":"LATENT"}],"properties":{"Node name for S&R":"KSampler"},"widgets_values":[1096960124364403,"randomize",20,1,"euler","simple",1]},{"id":16,"type":"CLIPTextEncode","pos":[103,167],"size":{"0":422.84503173828125,"1":164.31304931640625},"flags":{"collapsed":true},"order":3,"mode":0,"inputs":[{"name":"clip","type":"CLIP","link":18,"slot_index":0,"label":"clip"}],"outputs":[{"name":"CONDITIONING","type":"CONDITIONING","links":[16],"slot_index":0,"label":"CONDITIONING"}],"title":"CLIP Text Encode (Negative Prompt)","properties":{"Node name for S&R":"CLIPTextEncode"},"widgets_values":[""],"color":"#322","bgcolor":"#533"},{"id":17,"type":"Note","pos":[103,227],"size":{"0":285.57025146484375,"1":116.68089294433594},"flags":{},"order":2,"mode":0,"properties":{"text":""},"widgets_values":["Note that Flux dev and schnell do not have any negative prompt so CFG should be set to 1.0. Setting CFG to 1.0 means the negative prompt is ignored."],"color":"#432","bgcolor":"#653"},{"id":18,"type":"FluxGuidance","pos":[475,-393],"size":{"0":211.60000610351562,"1":58},"flags":{},"order":6,"mode":0,"inputs":[{"name":"conditioning","type":"CONDITIONING","link":19,"label":"conditioning"}],"outputs":[{"name":"CONDITIONING","type":"CONDITIONING","links":[15],"slot_index":0,"shape":3,"label":"CONDITIONING"}],"properties":{"Node name for S&R":"FluxGuidance"},"widgets_values":[3.5]},{"id":19,"type":"LoraLoader|pysssss","pos":[92,-220],"size":{"0":324.5400390625,"1":150},"flags":{},"order":4,"mode":0,"inputs":[{"name":"model","type":"MODEL","link":20,"label":"model"},{"name":"clip","type":"CLIP","link":23,"label":"clip"}],"outputs":[{"name":"MODEL","type":"MODEL","links":[21],"slot_index":0,"shape":3,"label":"MODEL"},{"name":"CLIP","type":"CLIP","links":[22],"slot_index":1,"shape":3,"label":"CLIP"}],"properties":{"Node name for S&R":"LoraLoader|pysssss"},"widgets_values":[{"content":"flux_tarot_v1_lora.safetensors","image":null},1,1,"[none]"]}],"links":[[11,15,0,11,0,"LATENT"],[12,14,2,11,1,"VAE"],[13,11,0,12,0,"IMAGE"],[15,18,0,15,1,"CONDITIONING"],[16,16,0,15,2,"CONDITIONING"],[17,13,0,15,3,"LATENT"],[18,14,1,16,0,"CLIP"],[19,10,0,18,0,"CONDITIONING"],[20,14,0,19,0,"MODEL"],[21,19,0,15,0,"MODEL"],[22,19,1,10,0,"CLIP"],[23,14,1,19,1,"CLIP"]],"groups":[],"config":{},"extra":{"ds":{"scale":1.1,"offset":[349.4297446644178,525.8645990478523]}},"version":0.4}

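This workflow uses the LoraLoader|pysssss node, which is provided by https://github.com/pythongosssss/ComfyUI-Custom-Scripts.

Clone the repository into your ComfyUI custom_nodes directory: git clone https://github.com/pythongosssss/ComfyUI-Custom-Scripts.git. On startup, it installs its custom scripts and nodes automatically, using symlinks and junctions where possible so that files do not have to be copied and stay up to date.

After cloning, restart ComfyUI for the changes to take effect.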

As in the previous steps, save the workflow code as a .json file and import it into ComfyUI to use it.
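Copying a long workflow out of a web page can easily damage it. A literal line break inside a quoted string, for instance, makes the text invalid JSON. The sketch below is a quick sanity check you could run on the pasted text before importing it. It is not a ComfyUI tool, and it assumes the link layout visible in the workflows above, where each entry in "links" is [link id, source node, source slot, target node, target slot, type].

```python
import json

def check_workflow(text: str) -> list[str]:
    """Return a list of problems found in a workflow JSON string (empty if none)."""
    try:
        workflow = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc}"]
    if not isinstance(workflow, dict):
        return ["top level is not a JSON object"]

    problems = []
    node_ids = {node["id"] for node in workflow.get("nodes", [])}
    for link in workflow.get("links", []):
        # Assumed layout: [link id, source node, source slot, target node, target slot, type]
        link_id, src, _src_slot, dst, _dst_slot, _kind = link
        for end in (src, dst):
            if end not in node_ids:
                problems.append(f"link {link_id} refers to missing node {end}")
    return problems

# Minimal stand-in for a pasted workflow with one dangling link.
sample = '{"nodes": [{"id": 1}], "links": [[5, 1, 0, 2, 0, "MODEL"]]}'
print(check_workflow(sample))  # ['link 5 refers to missing node 2']
```

An empty list means the text parsed and every link points at a node that exists. It does not guarantee that the required custom nodes are installed.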

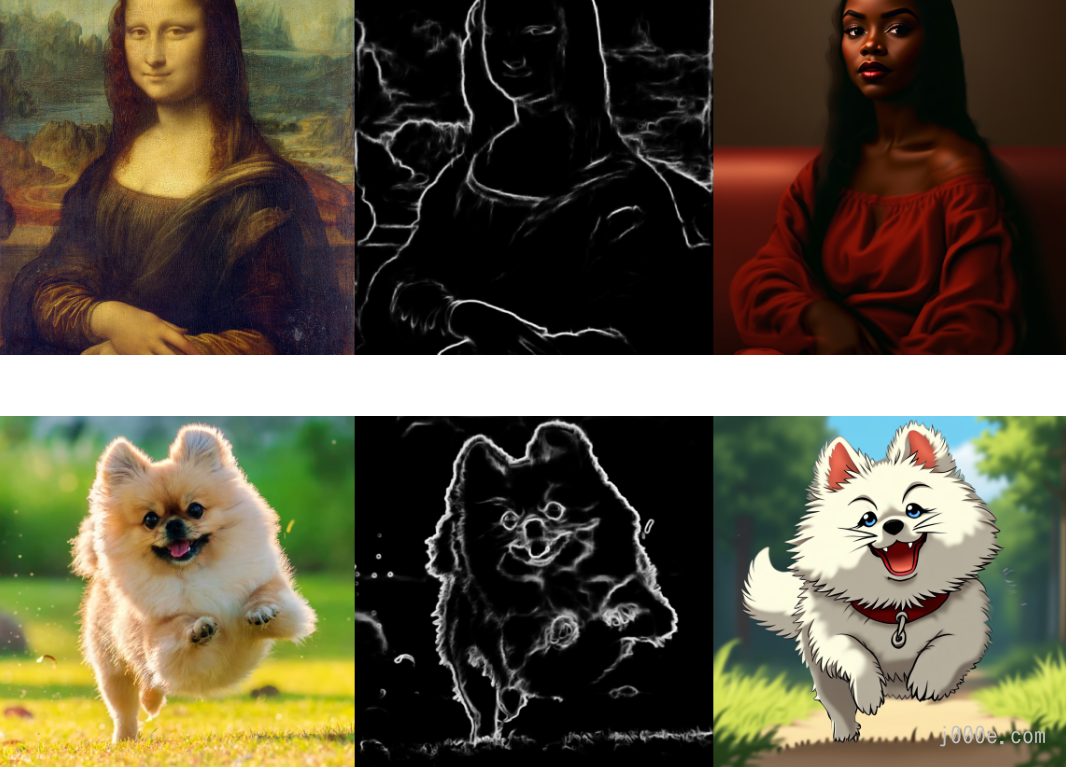

Here, we use the native workflow for testing, and the results are as follows:

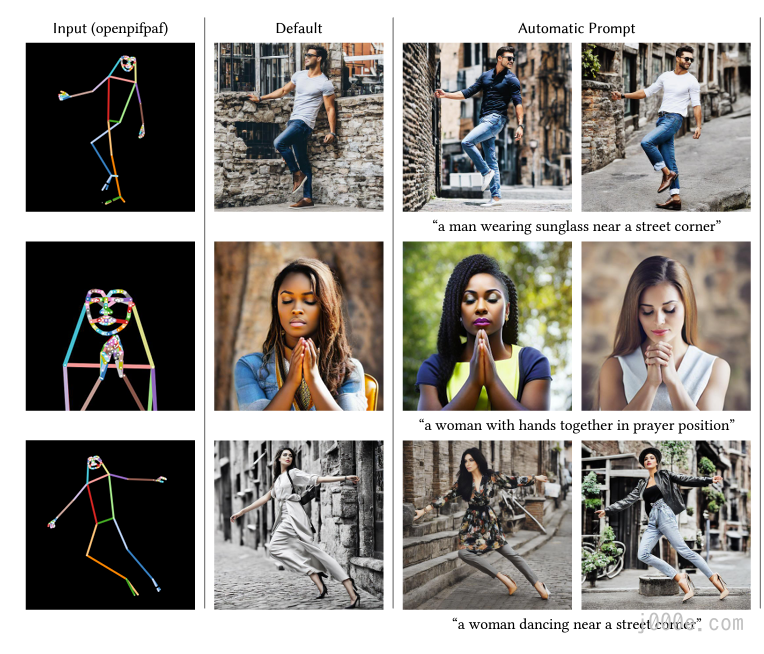

ControlNet (Advanced Usage)

ControlNet is used to give users more precise control over the image generation process, particularly when using models like Stable Diffusion. For example, if a user wants to generate an image based on a text prompt but also wants to ensure that the generated image follows a specific structure or outline (like a particular pose or shape), ControlNet allows the integration of that structure into the model’s decision-making process. This makes it possible to create images that not only match the text description but also adhere to specific visual constraints, such as the silhouette of a character or the layout of a scene.

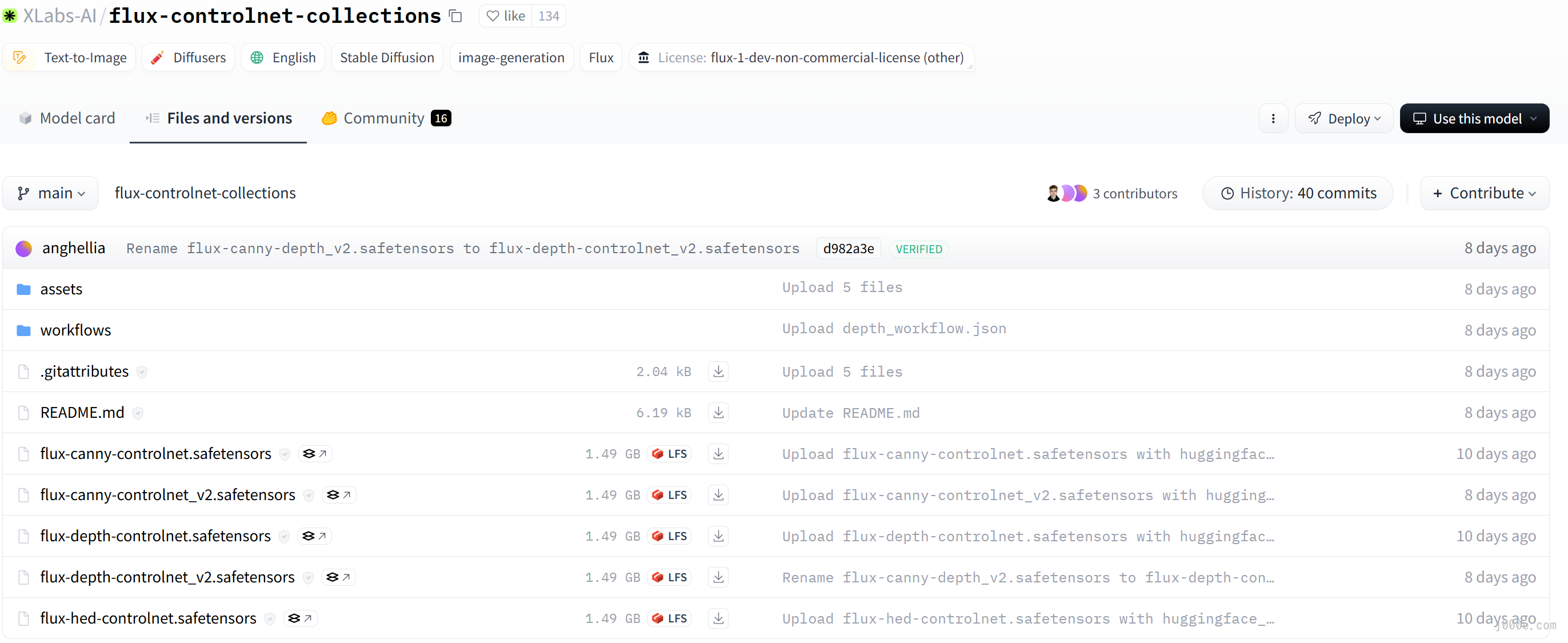

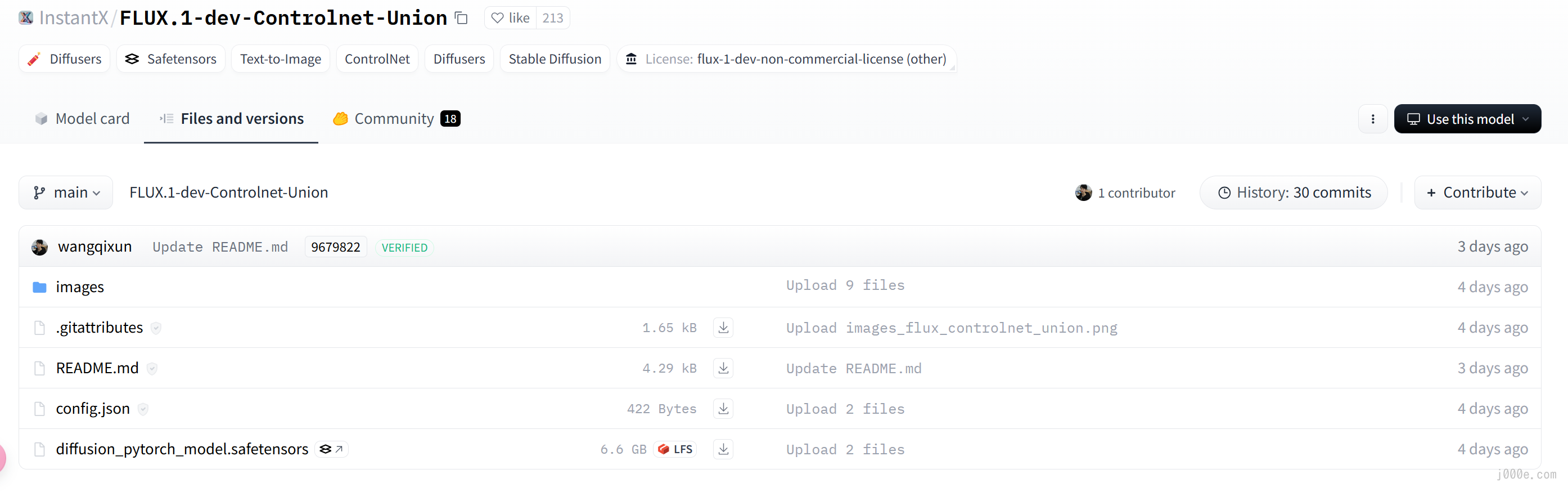

Download ControlNet Model

Currently, there are two teams in the open-source community that have trained ControlNet models suitable for FLUX.1. These models are compatible with both the official FLUX.1 and fp8 models. They also have higher VRAM requirements, needing 12GB or more to generate smoothly.

FLUX.1 ControlNet Collections:

https://huggingface.co/XLabs-AI/flux-controlnet-collections

InstantX FLUX.1 ControlNet Union:

https://huggingface.co/InstantX/FLUX.1-dev-Controlnet-Union-alpha

Here, we use the ControlNet developed by XLabs as an example. First, you need to install the dependencies:

- Go to ComfyUI/custom_nodes

- Clone this repo: git clone https://github.com/XLabs-AI/x-flux-comfyui.git

- Go to ComfyUI/custom_nodes/x-flux-comfyui/ and run python setup.py

- Restart ComfyUI

After the first launch, the ComfyUI/models/xlabs/loras and ComfyUI/models/xlabs/controlnets folders will be created automatically.

So, to use LoRA or ControlNet, just put the models in these folders.
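If you are unsure whether the plugin has created its folders yet, a short check like the one below can tell you. It is a sketch based only on the two folder paths named above. The function name is illustrative, and "ComfyUI" should be replaced with the path to your own installation.

```python
from pathlib import Path

def missing_xlabs_dirs(comfyui_root: str) -> list[str]:
    """Return the XLabs model folders that do not exist under comfyui_root."""
    root = Path(comfyui_root)
    expected = [
        root / "models" / "xlabs" / "loras",
        root / "models" / "xlabs" / "controlnets",
    ]
    return [path.as_posix() for path in expected if not path.is_dir()]

missing = missing_xlabs_dirs("ComfyUI")
if missing:
    print("Not created yet:", ", ".join(missing))
else:
    print("Both XLabs model folders exist.")
```

If a folder is reported as missing, launch ComfyUI once with the plugin installed and run the check again.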

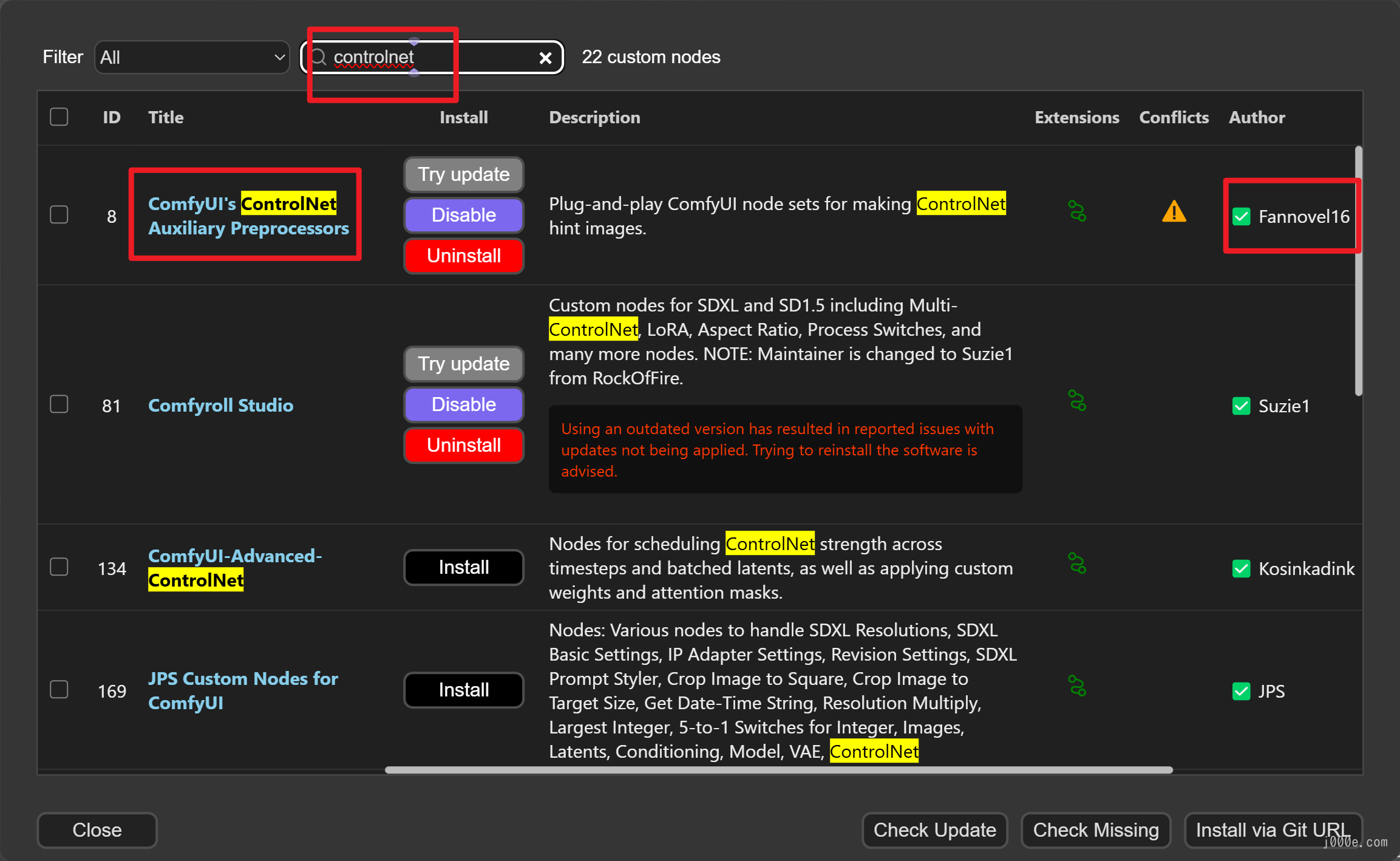

Next, we need to install ComfyUI-Manager. Similarly, go to ComfyUI/custom_nodes and run git clone https://github.com/ltdrdata/ComfyUI-Manager.git, then restart ComfyUI.

Click the "Manager" button on the main menu.

If you click on 'Install Custom Nodes' or 'Install Models', an installer dialog will open.

Click on the first Custom Nodes Manager in the middle column, search for "ControlNet" and you will see the comfyui_controlnet_aux project by the author Fannovel16. Click to install it. After the installation is complete, click restart. The dependency installation will run in the background, and once completed, ComfyUI will open a new browser tab.

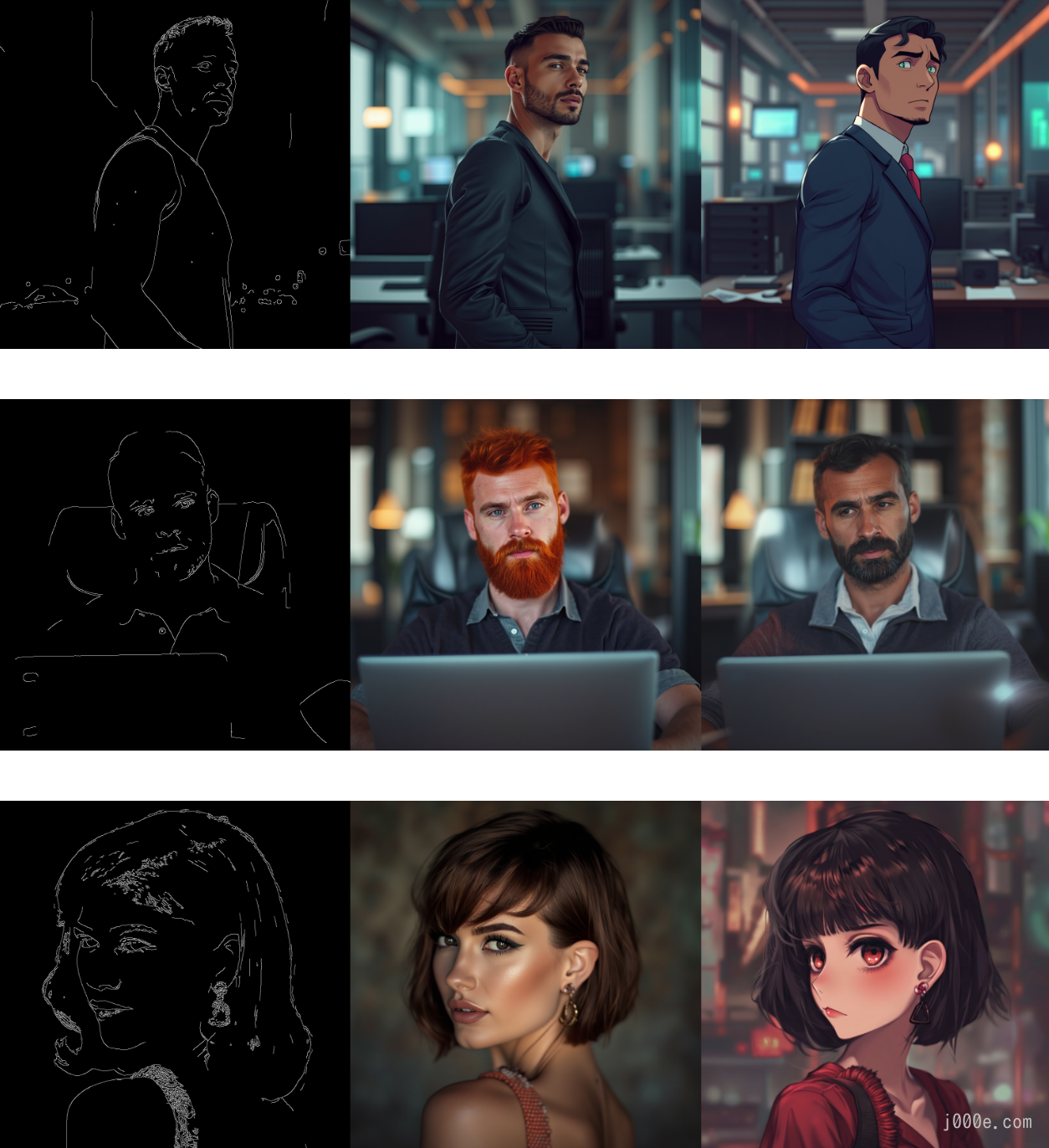

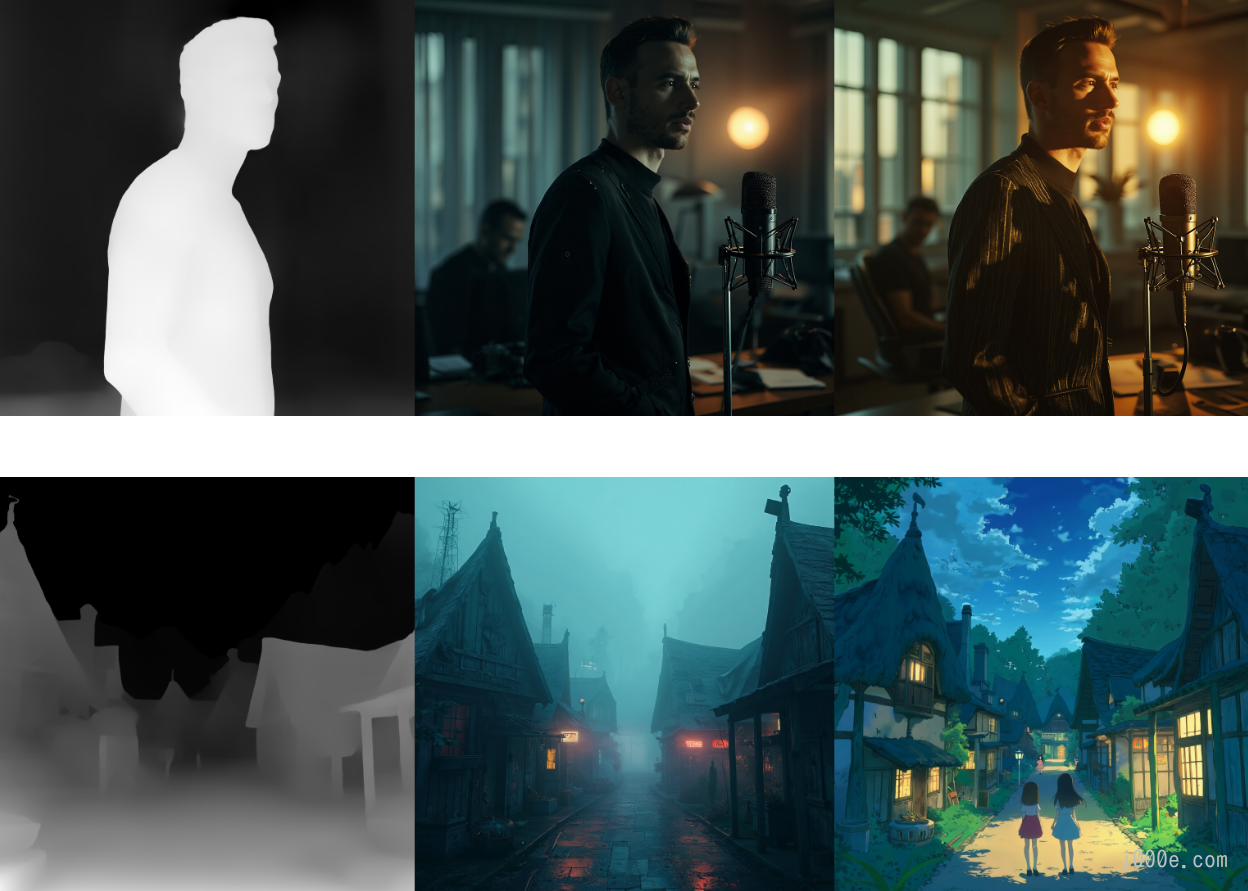

The Differences Between Canny, Depth, and HED ControlNet

Canny ControlNet (version 2)

Depth ControlNet (version 2)

HED ControlNet (version 1)

ControlNet Workflow

You can find the XLabs ControlNet workflow in ComfyUI\custom_nodes\x-flux-comfyui\workflows or at the following link:

https://github.com/XLabs-AI/x-flux-comfyui/tree/main/workflows

Example using Canny:

Low memory mode

You can run FLUX.1 with 12GB of VRAM.

- Follow installation as described in repo https://github.com/city96/ComfyUI-GGUF

- Use flux1-dev-Q4_0.gguf from repo https://github.com/city96/ComfyUI-GGUF

- Launch ComfyUI with parameters:

python3 main.py --lowvram --preview-method auto --use-split-cross-attention

In our workflows, replace "Load Diffusion Model" node with "Unet Loader (GGUF)"

FLUX.1 LoRA Online Training Tool

Replicate offers a training tool called "ostris/flux-dev-lora-trainer," which allows you to train your own LoRA model with as few as 10 images. You can give it a try.

- Price: Trainings for this model run on Nvidia H100 GPU hardware, which costs $0.001528 per second.

- How to Train: Read this document

- License: All FLUX.1-Dev LoRAs have the same license as the original base model for FLUX.1-dev
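To turn the per-second price quoted above into a rough budget, multiply it by the training time in seconds. The sketch below does that arithmetic. The 20-minute and 60-minute durations are hypothetical examples, not measured training times, and actual billing may differ.

```python
H100_USD_PER_SECOND = 0.001528  # per-second rate quoted above

def training_cost_usd(minutes: float) -> float:
    """Estimated cost in US dollars for a training run of the given length."""
    return round(minutes * 60 * H100_USD_PER_SECOND, 2)

print(training_cost_usd(20))  # 1.83
print(training_cost_usd(60))  # 5.5
```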

Conclusion

In conclusion, this comprehensive guide to FLUX.1 provides users with the tools and knowledge necessary to harness the power of this cutting-edge image generation model. From its installation and setup through ComfyUI to advanced usage with LoRA and ControlNet, this guide has covered every aspect required to leverage FLUX.1 effectively. The model's versatility in generating high-quality images, along with its open-source availability, makes it a valuable asset for artists, developers, and AI enthusiasts alike. Whether you're creating intricate visuals or fine-tuning the model to suit specific needs, FLUX.1 stands out as a robust and accessible choice in the ever-evolving landscape of AI-generated art. With continued support from the community and advancements in model training, FLUX.1 is set to remain a benchmark for high-quality, customizable image generation.